Accelerate Pedestrian Detection with SIMD Code

This example shows how to perform automatic detection and tracking of pedestrians in a video from a moving camera. You can generate SIMD code using Intel™ AVX2 technology to increase the number of frames per second in the video. A higher frame rate improves the quality and speed of the detection and tracking system.

Download Pedestrian Detection Video

This example uses the CamVid dataset [1] from the University of Cambridge. This dataset is a collection of images and videos containing street-level views obtained while driving. Download the file from the MathWorks® website:

CAMVideoURL = matlab.internal.examples.downloadSupportFile('vision/data', ...
    'CamVid_0016E5.mp4');

Create and Configure a Monocular Camera Sensor to Detect Pedestrians

Create a monoCamera (Automated Driving Toolbox) object that holds the configuration information for a monocular camera sensor. This information includes camera intrinsics, such as the focal length, optical center, and image size of the camera, and camera extrinsics, such as the mounting height and yaw angle of the camera.
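As a rough illustration of what the focal length and principal point mean, the sketch below builds a textbook pinhole intrinsic matrix from the same values and projects a point given in camera coordinates to pixel coordinates. It is plain MATLAB and does not call cameraIntrinsics or monoCamera; those objects may use different conventions (for example, a transposed matrix or a different pixel origin), and the 3-D points are hypothetical.

```matlab
% Sketch: textbook pinhole projection u = fx*X/Z + cx, v = fy*Y/Z + cy.
% Assumes the point is expressed in camera coordinates (Z along the optical axis).
focalLength    = [526.3158, 526.3158];   % [fx fy] in pixels
principalPoint = [319.5 179.5];          % [cx cy] in pixels

% Intrinsic matrix K in the column-vector convention [u; v; 1] ~ K*[X; Y; Z]
K = [focalLength(1) 0              principalPoint(1);
     0              focalLength(2) principalPoint(2);
     0              0              1];

% Hypothetical point 10 m along the optical axis, offset 1 m in X and 0.5 m in Y
P  = [1; 0.5; 10];
p  = K*P;
uv = p(1:2) / p(3);             % pixel coordinates of the point

% A point on the optical axis projects onto the principal point
q      = K*[0; 0; 5];
center = q(1:2) / q(3);
```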

focalLength = [526.3158, 526.3158];
principalPoint = [319.5 179.5];
imageSize = [360 640];
intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);
height = 1.2;
yaw = 13;
sensor = monoCamera(intrinsics,height,'Yaw',yaw);

Create an acfObjectDetector (Computer Vision Toolbox) object for detecting upright people. Then use the configureDetectorMonoCamera function to configure the detector for use with the camera, so that it searches only for objects whose width, in world units, lies in the pedWidth range (here 0.5 to 1.5 meters).

detector = peopleDetectorACF('caltech');
pedWidth = [0.5, 1.5];
detectorMonoCam = configureDetectorMonoCamera(detector, sensor, pedWidth);

To support code generation, the acfObjectDetector object must be converted to a structure. Use the toStruct (Computer Vision Toolbox) function to create a structure that stores the properties of the input acfObjectDetector object in Classifier and TrainingOptions fields. Set the WindowStride and NumScaleLevels training options, and then save the Classifier and TrainingOptions fields to a .mat file.

sModel = toStruct(detectorMonoCam);
sModel.TrainingOptions.WindowStride = 4;
sModel.TrainingOptions.NumScaleLevels = 8;
save('model.mat','-struct','sModel','TrainingOptions','Classifier');
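The '-struct' option of save is what lets the entry-point function read the model back as model.Classifier and model.TrainingOptions. The following sketch demonstrates that round trip with a small hypothetical stand-in structure rather than a real detector, using load in place of coder.load and a temporary file name.

```matlab
% Sketch with a hypothetical stand-in structure (not the real ACF model).
% save(..., '-struct', ...) writes each listed field as its own variable,
% and load returns those variables as fields of a structure again.
sModel.TrainingOptions = struct('WindowStride', 4, 'NumScaleLevels', 8);
sModel.Classifier      = struct('Weights', rand(1, 5));   % placeholder data
sModel.Unused          = 'not saved';                     % omitted from save below

fname = [tempname '.mat'];
save(fname, '-struct', 'sModel', 'TrainingOptions', 'Classifier');

model = load(fname);          % coder.load plays this role in generated code
vars  = fieldnames(model);    % only the two requested fields were written
delete(fname);
```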

Examine detectAndTrack Entry-Point Function

The detectAndTrack.m file is the main entry-point function for code generation. The detectAndTrack function loads the model.mat file that you just created and recreates an acfObjectDetector object to detect pedestrians within the input video.

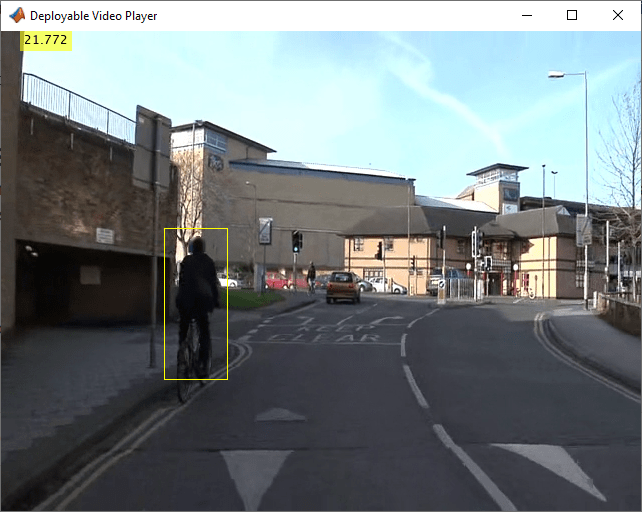

The detectAndTrack function uses the vision.VideoFileReader (Computer Vision Toolbox) System object to read frames from the input video, calls the setupTracker and detectObjects functions, and uses the updateTracks function of the multiObjectTracker object to update the tracker with new detections. The function writes the annotated frames to an AVI file by using a vision.VideoFileWriter (Computer Vision Toolbox) System object and displays them by using a vision.DeployableVideoPlayer (Computer Vision Toolbox) System object. Each frame is annotated with the frame rate of the detection and tracking steps, shown in the top-left corner.

type detectAndTrack.m

function detectAndTrack(CAMVideoURL)
%#codegen

% Create an ACF object detector for code generation.
model = coder.load('model.mat');
detector = acfObjectDetector(model.Classifier,model.TrainingOptions);

% Create a multi-object tracker to track multiple objects with Kalman filters.
[tracker, positionSelector] = setupTracker;

% Set up the video reader, writer, and player.
videoFReader = vision.VideoFileReader(CAMVideoURL);
videoFWriter = vision.VideoFileWriter('CamVid_0016E5_result.avi', 'FrameRate', 25);
depVideoPlayer = vision.DeployableVideoPlayer;
sampleFrame = videoFReader();
frameSize = [size(sampleFrame,1) size(sampleFrame,2)];

% Generate a sample detection from the sample frame.
detectionThresh = 50;
detections = detectObjects(detector, sampleFrame, 0, detectionThresh);
costMatrix = zeros([0 numel(detections)]);
[confirmedTracks,~,allTracks] = updateTracks(tracker, detections, 0, costMatrix);
coder.varsize('allTracks');

currentStep = 0;
totalTime = 0;

while ~isDone(videoFReader)
    % Update the frame counter.
    currentStep = currentStep + 1;

    % Read the next frame.
    frame = videoFReader();
    tic;

    % Detect pedestrians every other frame.
    detections = {};
    if rem(currentStep,2)
        detections = detectObjects(detector, frame, currentStep, detectionThresh);
    end

    % Track pedestrians in every frame.
    costMatrix = detectionToTrackCost(allTracks, detections, positionSelector, tracker.AssignmentThreshold);
    [confirmedTracks,~,allTracks] = updateTracks(tracker, detections, currentStep, costMatrix);

    % Remove the tracks for people that are far away.
    confirmedTracks = removeNoisyTracks(confirmedTracks, positionSelector, frameSize);

    time = toc;
    totalTime = totalTime + time;

    % Insert tracking and frame rate annotations.
    framerate = currentStep/totalTime;
    frame = insertTrackBoxes(frame, confirmedTracks, positionSelector);
    frame = insertText(frame, [20 20], framerate, 'AnchorPoint', 'LeftBottom');

    % Write to the output file and display the annotated frame.
    videoFWriter(frame);
    depVideoPlayer(frame);
end

release(videoFWriter);

end
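Two details of the loop are easy to miss: the detector runs only on steps where rem(currentStep,2) is nonzero, and the displayed frame rate is the number of frames processed divided by the accumulated tic/toc time, which excludes video reading, annotation, writing, and display. The sketch below reproduces both computations in plain MATLAB with hypothetical per-frame timings.

```matlab
% Sketch of two bookkeeping rules in detectAndTrack:
% 1) the detector runs only when rem(currentStep,2) is nonzero (odd steps);
% 2) the displayed frame rate is frames processed / cumulative processing time.
numSteps    = 6;
steps       = 1:numSteps;
detectSteps = steps(rem(steps, 2) ~= 0);   % steps where detectObjects runs

% Hypothetical per-frame processing times in seconds (not measured values):
% detection frames are assumed slower than tracking-only frames.
frameTimes = [0.05 0.02 0.05 0.02 0.05 0.02];
totalTime  = cumsum(frameTimes);
framerate  = steps ./ totalTime;           % value that would be shown on each frame
```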

Examine Supporting Files

The setupTracker function creates a multiObjectTracker (Automated Driving Toolbox) to track multiple objects with Kalman filters. The outputs of setupTracker are:

tracker: The multiObjectTracker object that is configured for this example.

positionSelector: A matrix that specifies which elements of the State vector define the position, such that position = positionSelector * State.

type setupTracker.m

function [tracker, positionSelector] = setupTracker()
tracker = multiObjectTracker('FilterInitializationFcn', @initBboxFilter, ...
    'AssignmentThreshold', 1, ...
    'NumCoastingUpdates', 5, ...
    'ConfirmationThreshold', [1 5], ...
    'MaxNumTracks', 10, ...
    'HasCostMatrixInput', true);

% Create a matrix that specifies which state vector elements are the position.
positionSelector = [1 0 0 0 0 0 0 0; ...
                    0 0 1 0 0 0 0 0; ...
                    0 0 0 0 1 0 0 0; ...
                    0 0 0 0 0 0 1 0];
end
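To see how positionSelector works, multiply it by a state vector ordered as in initBboxFilter ([x; vx; y; vy; w; vw; h; vh]). The state values in this sketch are hypothetical.

```matlab
% Worked example: positionSelector picks [x; y; w; h] out of the
% 8-element constant-velocity state [x; vx; y; vy; w; vw; h; vh].
positionSelector = [1 0 0 0 0 0 0 0; ...
                    0 0 1 0 0 0 0 0; ...
                    0 0 0 0 1 0 0 0; ...
                    0 0 0 0 0 0 1 0];

% Hypothetical state: box at (100, 50), 30 px wide, 60 px tall, moving right
state    = [100; 2; 50; 0; 30; 0; 60; 0];
position = positionSelector * state;   % velocities are dropped
```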

The initBboxFilter function defines a Kalman filter to filter the bounding box measurement.

type initBboxFilter.m

function filter = initBboxFilter(Detection)
% Step 1: Define the motion model and state.
% Use a constant velocity model for a bounding box on the image.
% The state is [x; vx; y; vy; w; vw; h; vh].
% The state transition matrix is:
% [1 dt 0  0 0  0 0  0;
%  0  1 0  0 0  0 0  0;
%  0  0 1 dt 0  0 0  0;
%  0  0 0  1 0  0 0  0;
%  0  0 0  0 1 dt 0  0;
%  0  0 0  0 0  1 0  0;
%  0  0 0  0 0  0 1 dt;
%  0  0 0  0 0  0 0  1]
% Assume dt = 1. This example does not consider a time-variant transition
% model for the linear Kalman filter.
dt = 1;
cvel = [1 dt; 0 1];
A = blkdiag(cvel, cvel, cvel, cvel);

% Step 2: Define the process noise.
% The process noise represents the parts of the process that the model
% does not take into account. For example, in a constant velocity model,
% the acceleration is neglected.
G1d = [dt^2/2; dt];
Q1d = G1d*G1d';
Q = blkdiag(Q1d, Q1d, Q1d, Q1d);

% Step 3: Define the measurement model.
% Only the position ([x;y;w;h]) is measured.
% The measurement model is:
H = [1 0 0 0 0 0 0 0; ...
     0 0 1 0 0 0 0 0; ...
     0 0 0 0 1 0 0 0; ...
     0 0 0 0 0 0 1 0];

% Step 4: Map the sensor measurements to an initial state vector.
% Because there is no measurement of the velocity, the v components are
% initialized to 0:
state = [Detection.Measurement(1); ...
         0; ...
         Detection.Measurement(2); ...
         0; ...
         Detection.Measurement(3); ...
         0; ...
         Detection.Measurement(4); ...
         0];

% Step 5: Map the sensor measurement noise to a state covariance.
% For the parts of the state that the sensor measures directly, use the
% corresponding measurement noise components. For the parts that the
% sensor does not measure, assume a large initial state covariance. That way,
% future detections can be assigned to the track.
L = 100; % Large value
stateCov = diag([Detection.MeasurementNoise(1,1), ...
                 L, ...
                 Detection.MeasurementNoise(2,2), ...
                 L, ...
                 Detection.MeasurementNoise(3,3), ...
                 L, ...
                 Detection.MeasurementNoise(4,4), ...
                 L]);

% Step 6: Create the correct filter.
% In this example, all the models are linear, so use trackingKF as the
% tracking filter.
filter = trackingKF(...
    'StateTransitionModel', A, ...
    'MeasurementModel', H, ...
    'State', state, ...
    'StateCovariance', stateCov, ...
    'MeasurementNoise', Detection.MeasurementNoise, ...
    'ProcessNoise', Q);
end
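For intuition about what trackingKF does with these matrices, the following sketch runs one predict/update cycle of a standard linear Kalman filter by hand. It reuses A, Q, and H from initBboxFilter, the measurement noise from detectObjects, and the initial covariance that Step 5 produces. The two detections are hypothetical, and trackingKF's internal implementation may differ numerically from this textbook form.

```matlab
% Sketch: one textbook Kalman predict/update cycle with the initBboxFilter models.
dt   = 1;
cvel = [1 dt; 0 1];
A    = blkdiag(cvel, cvel, cvel, cvel);
G1d  = [dt^2/2; dt];
Q1d  = G1d*G1d';
Q    = blkdiag(Q1d, Q1d, Q1d, Q1d);
H    = [1 0 0 0 0 0 0 0; 0 0 1 0 0 0 0 0; 0 0 0 0 1 0 0 0; 0 0 0 0 0 0 1 0];
R    = diag([100 100 50 50]);                 % measurement noise used in detectObjects

% Initial state and covariance from a hypothetical detection [x y w h] = [100 50 30 60]
x = [100; 0; 50; 0; 30; 0; 60; 0];
P = diag([100 100 100 100 50 100 50 100]);   % Step 5 with L = 100

% Predict with the constant-velocity model (velocities are 0, so x is unchanged)
x = A*x;
P = A*P*A' + Q;

% Update with a hypothetical second detection shifted 4 px to the right
z = [104; 50; 30; 60];
S = H*P*H' + R;                               % innovation covariance
K = P*H' / S;                                 % Kalman gain (8-by-4)
x = x + K*(z - H*x);                          % position moves toward z, vx becomes positive
P = (eye(8) - K*H) * P;
```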

The detectObjects function detects pedestrians in an image.

type detectObjects.m

function detections = detectObjects(detector, frame, frameCount, thresh)
% Run the detector and return a list of bounding boxes: [x, y, w, h]
[bboxes_raw, scores] = detect(detector, frame);
numDetections = sum(scores>thresh);
bboxes = bboxes_raw(scores>thresh, :);

% Define the measurement noise.
L = 100;
measurementNoise = [L 0 0 0; ...
                    0 L 0 0; ...
                    0 0 L/2 0; ...
                    0 0 0 L/2];

% Formulate the detections as a list of objectDetection reports.
detections = cell(numDetections, 1);
objAttr = struct('VisionID', uint32(0), 'Size', zeros(1,3,'single'), ...
    'RadarID', uint16(0), 'Status', uint8(0), 'Amplitude', single(0), ...
    'RangeMode', uint8(0));
for i = 1:numDetections
    detections{i} = objectDetection(frameCount, bboxes(i, :), ...
        'MeasurementNoise', measurementNoise, 'ObjectAttributes', {objAttr});
end
end
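The thresholding at the start of detectObjects keeps only the rows of bboxes_raw whose score exceeds thresh. This sketch applies the same logical indexing to hypothetical detector output and checks that the measurement noise matrix is simply a diagonal matrix.

```matlab
% Sketch of the score filtering in detectObjects, using hypothetical
% detector output: three boxes [x y w h] and their scores.
thresh     = 50;                           % detectionThresh in detectAndTrack
bboxes_raw = [ 10 20 30 60;
              200 40 25 50;
              400 30 35 70];
scores     = [62; 41; 75];

keep          = scores > thresh;           % logical mask, one entry per box
numDetections = sum(keep);                 % number of objectDetection reports
bboxes        = bboxes_raw(keep, :);       % boxes passed to the tracker

% Measurement noise: larger variance on position (x, y) than on size (w, h)
L = 100;
measurementNoise = diag([L L L/2 L/2]);    % equals the explicit 4-by-4 matrix
```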

The removeNoisyTracks function removes noisy tracks. A track is considered noisy if its predicted bounding box is too small, which typically means that the pedestrian is far away, or if the box is about to move out of the image.

type removeNoisyTracks.m

function tracks = removeNoisyTracks(tracks, positionSelector, imageSize)
if isempty(tracks)
    return
end

% Extract the positions from all the tracks.
positions = getTrackPositions(tracks, positionSelector);

% The track is 'invalid' if the predicted position is about to move out
% of the image, or the bounding box is too small.
invalid = ( positions(:, 1) < 1 | ...
            positions(:, 1) + positions(:, 3) > imageSize(2) | ...
            positions(:, 3) <= 5 | ...
            positions(:, 4) <= 10 );
tracks(invalid) = [];
end
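Each condition in the invalid expression covers one way a track can be rejected. The sketch evaluates the same expression on hypothetical predicted boxes for a 360-by-640 frame, one box per condition plus one box that survives.

```matlab
% Sketch: the removeNoisyTracks validity test on hypothetical boxes [x y w h].
imageSize = [360 640];                     % [rows cols]
positions = [  0 100 20 40;                % left edge outside the image (x < 1)
             600 100 50 40;                % right edge 600 + 50 > 640
             100 100  4 40;                % too narrow (w <= 5)
             100 100 20  8;                % too short (h <= 10)
             100 100 20 40];               % valid box, kept

invalid = ( positions(:, 1) < 1 | ...
            positions(:, 1) + positions(:, 3) > imageSize(2) | ...
            positions(:, 3) <= 5 | ...
            positions(:, 4) <= 10 );
keptRows = find(~invalid);                 % indices of tracks that remain
```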

The insertTrackBoxes function inserts bounding boxes in an image.

type insertTrackBoxes.m

function I = insertTrackBoxes(I, tracks, positionSelector)
if isempty(tracks)
    return
end

bboxes = getTrackPositions(tracks, positionSelector);
for i = 1:size(bboxes,1)
    box = bboxes(i, :);
    I = insertShape(I,'Rectangle',int32(box));
end
end

Create Code Generation Configuration Object

To generate a standalone executable for the detectAndTrack entry-point function, use the coder.config function to create a coder.EmbeddedCodeConfig object for an exe target. This object contains the configuration parameters that the codegen function uses for generating an executable program with Embedded Coder™.

ecfg = coder.config('exe');

Specify an example main C function that the code generator compiles to create a test executable.

ecfg.GenerateExampleMain = 'GenerateCodeAndCompile';

Optimize the build for faster-running executables. Specify that the code generator does not produce code to handle integer overflow, and that it produces code to support nonfinite values (Inf and NaN) only if they are used.

ecfg.BuildConfiguration = 'Faster Runs';
ecfg.SaturateOnIntegerOverflow = false;
ecfg.SupportNonFinite = true;

Allocate memory dynamically on the heap for variable-size arrays whose size (in bytes) is greater than or equal to the value of the DynamicMemoryAllocationThreshold parameter.

ecfg.EnableDynamicMemoryAllocation = true;
ecfg.DynamicMemoryAllocationThreshold = 2e8;

Because this example generates code from Automated Driving Toolbox™ and Computer Vision Toolbox™ functions, the generated code must be portable and must not rely on third-party libraries. To generate portable code that you can retarget for an Intel device, specify a nonhost target in the coder.HardwareImplementation object stored in ecfg.HardwareImplementation. Then, configure the production hardware settings to match those of an Intel device.

ecfg.HardwareImplementation.ProdHWDeviceType = "Generic->Custom";
ecfg.HardwareImplementation.ProdBitPerLong = 64;
ecfg.HardwareImplementation.ProdBitPerPointer = 64;
ecfg.HardwareImplementation.ProdBitPerPtrDiffT = 64;
ecfg.HardwareImplementation.ProdBitPerSizeT = 64;
ecfg.HardwareImplementation.ProdEndianess = "LittleEndian";
ecfg.HardwareImplementation.ProdIntDivRoundTo = "Zero";
ecfg.HardwareImplementation.ProdLargestAtomicFloat = "Float";
ecfg.HardwareImplementation.ProdWordSize = 64;

For some Image Processing Toolbox™ functions, the code generator uses the OpenMP application programming interface to support shared-memory, multicore code generation. To achieve the highest frame rate and avoid the overhead of running more threads than the processor can use efficiently, consider specifying a maximum number of threads for the parallel for-loops in the generated code. To do so, set the OpenMP environment variable OMP_NUM_THREADS to a number less than or equal to the number of cores in your processor. For more information, see https://www.openmp.org/specifications/. This example sets this variable to 4.

setenv('OMP_NUM_THREADS','4')
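Hard-coding 4 assumes a processor with at least four cores. A hedged alternative is to cap the value at the number of computational threads that MATLAB reports through maxNumCompThreads, which by default matches the number of physical cores. The cap of 4 is kept from this example; adjust it for your processor.

```matlab
% Sketch: never request more OpenMP threads than MATLAB's computational
% thread count (by default, the number of physical cores).
requested  = 4;                                % value used in this example
numThreads = min(requested, maxNumCompThreads);
setenv('OMP_NUM_THREADS', num2str(numThreads));

% Executables started from MATLAB afterward (for example, with !) inherit this value.
current = getenv('OMP_NUM_THREADS');
```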

Generate Non-SIMD Code

inputs = {coder.Constant(CAMVideoURL)};
evalc('codegen -args inputs -config ecfg detectAndTrack.m');

Run the executable and observe the frame rate at the top left of the video. This example runs the executable on Windows. To run the executable on Linux, change the command to !./detectAndTrack.

!detectAndTrack.exe

Generate SIMD Code

Configure the code generation configuration object to generate SIMD code using AVX2 technology.

ecfg.InstructionSetExtensions = "AVX2";

Generate code.

evalc('codegen -args inputs -config ecfg detectAndTrack.m');

Run the executable and observe the higher frame rate.

!detectAndTrack.exe
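A back-of-the-envelope calculation shows where the gain can come from: AVX2 instructions operate on 256-bit registers, so one instruction can process several array elements at once. The sketch only computes these lane counts; the measured speedup is usually lower, because not every loop vectorizes and video file I/O and display also take time.

```matlab
% Sketch: how many elements of each type fit in one 256-bit AVX2 register.
registerBits = 256;
lanesSingle  = registerBits / 32;   % single-precision values per instruction
lanesDouble  = registerBits / 64;   % double-precision values per instruction
lanesInt32   = registerBits / 32;   % int32 values per instruction
lanesUint8   = registerBits / 8;    % uint8 values (for example, pixel data)
```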

References

[1] Brostow, Gabriel J., Julien Fauqueur, and Roberto Cipolla. "Semantic object classes in video: A high-definition ground truth database." Pattern Recognition Letters 30.2 (2009): 88-97.