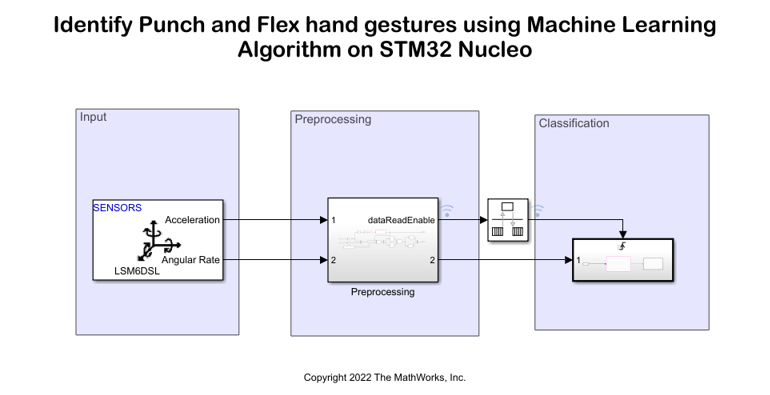

Identify Punch and Flex Hand Gestures Using Machine Learning Algorithm on STMicroelectronics Nucleo Boards

This example shows how to use the Simulink® Coder Support Package for STMicroelectronics® Nucleo boards to identify punch and flex hand gestures using a machine learning algorithm. The example is deployed on an STM Nucleo board that uses the external LSM6DSL sensor on the X-NUCLEO-IKS01A2 sensor shield to identify the hand gestures. After the machine learning algorithm identifies whether a hand gesture is a punch or a flex, its output is transmitted to the serial port, where 0 represents a punch and 1 represents a flex.

Prerequisites

For more information on how to run a Simulink model on an STM Nucleo board, see Setup and Configuration.

For more information on machine learning, see Get Started with Statistics and Machine Learning Toolbox.

Required Hardware

STMicroelectronics Nucleo board

LSM6DSL IMU sensor in X-NUCLEO-IKS01A2 Sensor shield

USB cable

Hardware Setup

Connect the STMicroelectronics board to the host computer using the USB cable.

Connect the LSM6DSL IMU sensor to pins D14 (SDA) and D15 (SCL) on the Nucleo board to communicate over I2C.

Prepare Data Set for Training Machine Learning Algorithm

This example uses a MATLAB® code file named STM32CaptureTrainingData to measure the raw data for the flex and punch hand gestures. Open the file and configure these parameters.

Specify the acceleration threshold in the accelerationThreshold parameter. In this example, the threshold is set to 2.5.

Create an LSM6DS3 object and specify the number of samples read in a single execution of the read function. In this example, the parameter is set to 119.

Specify the number of frames to be captured per gesture in the while loop. In this example, 100 frames are captured per gesture.
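The capture script itself is not reproduced on this page. As a rough, hardware-free sketch of how these three parameters might interact, the code below scans a synthetic six-column stream and stores a frame whenever the summed absolute acceleration exceeds the threshold. The synthetic stream, the variable names other than accelerationThreshold, and the trigger rule are assumptions for illustration, not the contents of STM32CaptureTrainingData.

```matlab
% Hypothetical sketch of threshold-triggered frame capture (no hardware).
% A synthetic stream stands in for the IMU readings.
accelerationThreshold = 2.5;   % in g, as in the example
samplesPerFrame = 119;         % samples stored per gesture
framesToCapture = 3;           % the example captures 100

rng(0);                                        % reproducible synthetic data
stream = 0.1*randn(2000,6);                    % quiet sensor: [ax ay az gx gy gz]
for s = [200 800 1400]                         % where synthetic gestures begin
    stream(s:s+samplesPerFrame-1,1:3) = 1.5;   % strong acceleration burst
end

frames = cell(1,framesToCapture);
k = 1;                                         % current sample index
n = 0;                                         % frames captured so far
while n < framesToCapture && k + samplesPerFrame - 1 <= size(stream,1)
    if sum(abs(stream(k,1:3))) > accelerationThreshold
        n = n + 1;
        frames{n} = stream(k:k+samplesPerFrame-1,:);
        k = k + samplesPerFrame;               % skip past the captured frame
    else
        k = k + 1;
    end
end
disp(size(frames{1}))
```

Each captured frame is a 119-by-6 matrix, which is the shape the feature extraction step later assumes.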

To capture the flex hand gestures, run this command in the MATLAB Command Window.

flex = STM32CaptureTrainingData;

Hold the Nucleo board in the palm of your hand and throw a flex. To create a data set of 100 frames for a flex gesture, throw your hand for a flex 100 times. Observe the value of the Gesture no. being incremented every time you throw a flex in the MATLAB Command Window.

A 1-by-100 set of flex hand gesture frames, read by the LSM6DSL IMU sensor, is now available in the Workspace. Right-click flex in the Workspace and save it as FlexDataset.mat in the working directory of the example.

Follow the same procedure to create a data set of 100 frames for a punch gesture.

To capture the punch hand gestures, run this command in the MATLAB Command Window.

punch = STM32CaptureTrainingData;

Hold the Nucleo board in the palm of your hand and throw a punch. To create a data set of 100 frames for a punch gesture, throw your hand for a punch 100 times. Observe the value of the Gesture no. being incremented every time you throw a punch in the MATLAB Command Window.

A 1-by-100 set of punch hand gesture frames, read by the LSM6DSL IMU sensor, is now available in the Workspace. Right-click punch in the Workspace and save it as PunchDataset.mat in the working directory of the example.

When you create your own data set for the flex and punch hand gestures, make sure the MAT file names match the names that the STM32grScript MATLAB code file loads.

The STM32grScript MATLAB code file is used to preprocess the data set for the flex and punch hand gestures, train the machine learning algorithm with the data set, and evaluate how accurately the algorithm predicts these hand gestures.

To edit the STM32grScript.m file, run this command in the MATLAB® Command Window.

edit STM32grScript;

Use this MATLAB code file to prepare the Simulink model in the support package and then deploy the model on the Nucleo board.

Alternatively, you can load the flex and punch data sets that STM32grScript uses, which are available in MATLAB.

load FlexDataset.mat

load PunchDataset.mat

To train and test the machine learning algorithm, each hand gesture is captured as a frame of 119 consecutive data samples read from the accelerometer and the gyroscope. Each sample has six values, obtained from the X, Y, and Z axes of the accelerometer and the gyroscope, respectively. With 100 frames captured per gesture, 11,900 such samples are stored in the data set for each of the two hand gestures, flex and punch.

Extract Features

Features are extracted by taking the mean and the standard deviation of each column in a frame. This results in a 100-by-12 matrix of observations for each gesture.

f1 = cellfun(@mean,flex','UniformOutput',false); % Per-frame column means

f2 = cellfun(@std,flex','UniformOutput',false); % Per-frame column standard deviations

flexObs = cell2mat([f1,f2]);

flexLabel = ones(100,1);

p1 = cellfun(@mean,punch','UniformOutput',false);

p2 = cellfun(@std,punch','UniformOutput',false);

punchObs = cell2mat([p1,p2]);

punchLabel = 2*ones(100,1);

X = [flexObs;punchObs]; % Combined observations

Y = [flexLabel;punchLabel]; % Combined labels
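To make the dimensions concrete, the following sketch runs the same feature extraction on synthetic stand-in data. It assumes each gesture variable is a 1-by-100 cell array whose cells hold 119-by-6 frames, which is what the cellfun calls above imply. The random data and the totalSamples variable are illustrative only.

```matlab
% Synthetic stand-ins for the captured data (assumption: 1-by-100 cell
% arrays of 119-by-6 frames; real data comes from the capture script).
rng(0);
flex  = arrayfun(@(~) randn(119,6),     1:100, 'UniformOutput', false);
punch = arrayfun(@(~) 3 + randn(119,6), 1:100, 'UniformOutput', false);

% Number of six-value samples stored per gesture: 100 frames of 119 samples.
totalSamples = sum(cellfun(@(f) size(f,1), flex));

% Per-frame column means and standard deviations, as in the example.
f1 = cellfun(@mean, flex',  'UniformOutput', false);
f2 = cellfun(@std,  flex',  'UniformOutput', false);
flexObs = cell2mat([f1,f2]);                 % 100-by-12

p1 = cellfun(@mean, punch', 'UniformOutput', false);
p2 = cellfun(@std,  punch', 'UniformOutput', false);
punchObs = cell2mat([p1,p2]);                % 100-by-12

X = [flexObs; punchObs];                     % 200-by-12
Y = [ones(100,1); 2*ones(100,1)];            % 200-by-1
disp(size(X))
```

Columns 1 to 6 of each row hold the means and columns 7 to 12 hold the standard deviations of the six sensor channels for one frame.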

Prepare Data

This example uses 80% of the observations to train a model that classifies two types of hand gestures and 20% of the observations to validate the trained model. Use cvpartition to specify a 20% holdout for the test data set.

rng('default') % For reproducibility

Partition = cvpartition(Y,'Holdout',0.2);

trainingInds = training(Partition); % Indices for the training set

XTrain = X(trainingInds,:);

YTrain = Y(trainingInds);

testInds = test(Partition); % Indices for the test set

XTest = X(testInds,:);

YTest = Y(testInds);

Train Decision Tree at Command Line

Train a classification model.

treeMdl = fitctree(XTrain,YTrain);

Perform a fivefold cross-validation for treeMdl and compute the validation accuracy.

partitionedModel = crossval(treeMdl,'KFold',5);

validationAccuracy = 1-kfoldLoss(partitionedModel)

validationAccuracy = 1

Evaluate Performance on Test Data

Evaluate performance on the test data set.

testAccuracy = 1-loss(treeMdl,XTest,YTest)

testAccuracy = 1

The trained model correctly classifies 100% of the hand gestures in the test data set. This result suggests that the trained model does not overfit the training data set.
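A single accuracy figure does not show which class any errors fall in. As a complementary check, the sketch below repeats the partition, train, and evaluate steps on synthetic features and tabulates the test predictions in a confusion matrix. The synthetic data and the names part, mdl, YPred, and C are assumptions for illustration. cvpartition, fitctree, predict, and confusionmat are Statistics and Machine Learning Toolbox functions.

```matlab
% Hypothetical, well-separated synthetic features in place of the real X.
rng('default')                                % for reproducibility
X = [randn(100,12); 4 + randn(100,12)];
Y = [ones(100,1); 2*ones(100,1)];             % same label values as the example

part = cvpartition(Y,'Holdout',0.2);          % 20% holdout for testing
XTrain = X(training(part),:);
YTrain = Y(training(part));
XTest  = X(test(part),:);
YTest  = Y(test(part));

mdl = fitctree(XTrain,YTrain);                % train a classification tree
YPred = predict(mdl,XTest);                   % predicted labels for the test set

C = confusionmat(YTest,YPred);                % rows: true class, columns: predicted
testAccuracy = sum(diag(C))/sum(C(:));
disp(C)
```

Off-diagonal entries of C count misclassified test gestures, so a model that confuses one gesture for the other shows up there even when the overall accuracy looks high.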

Prepare Simulink Model and Calibrate Parameters

After you have prepared a classification model, use the STM32grScript.m file as a Simulink model initialization function.

To open the Simulink model, run this command in the MATLAB Command Window.

open_system('MachineLearningPrediction')

The external LSM6DSL IMU sensor in X-NUCLEO-IKS01A2 Sensor shield that is attached to the Nucleo board measures linear acceleration and angular velocity along the X, Y, and Z axes. Configure these parameters in the Block Parameters dialog box of the LSM6DSL IMU Sensor block:

Set the I2C address of the sensor to 0x6B to communicate with the accelerometer and gyroscope peripherals of the sensor.

Select the Acceleration (m/s^2) and Angular velocity (rad/s) output ports.

Set the Sample time to 0.01.

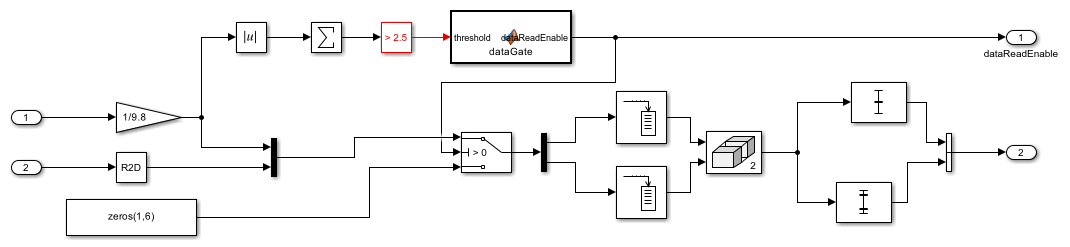

The 1-by-3 acceleration and angular velocity vectors are collected from the LSM6DSL IMU sensor at the sample time you specify in the Block Parameters dialog box. This data is then preprocessed in the Preprocessing subsystem.

To open the subsystem, run this command in the MATLAB Command Window.

open_system('MachineLearningPrediction/Preprocessing')

The acceleration data is first converted from m/s^2 to g. The absolute values are then summed, and for every 119 data values for which this sum is greater than the threshold of 2.5 g, the dataReadEnable parameter in the MATLAB Function block becomes logically true. This acts as a trigger to the Triggered subsystem in the Classification area.

The angular velocity data is converted from rad/s to deg/s. The acceleration and angular velocity data is multiplexed and given as an input to the Switch. For a data value greater than 0, the buffer stores the valid 119 gesture values corresponding to a punch or a flex. For data values less than zero, which indicate that no hand gesture is detected, a 1-by-6 vector of zeros is sent to the output to match the size of the combined acceleration and angular velocity data.
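The unit conversions and the threshold comparison can be sketched in plain MATLAB for a single sensor reading. This is not the code inside the Preprocessing subsystem. The sample values, the variable names, and the use of standard gravity (9.80665 m/s^2) as the conversion factor are assumptions for illustration.

```matlab
% Hypothetical single reading from the sensor block outputs.
accel = [12.0 -9.0 15.0];        % acceleration in m/s^2
gyro  = [0.5 -1.0 2.0];          % angular velocity in rad/s

g = 9.80665;                     % standard gravity, assumed conversion factor
accelG  = accel / g;             % acceleration in g
gyroDeg = rad2deg(gyro);         % angular velocity in deg/s

threshold = 2.5;                 % in g, as in the example
aboveThreshold = sum(abs(accelG)) > threshold;

% Combined 1-by-6 sample, or zeros when no gesture is detected.
if aboveThreshold
    sample = [accelG gyroDeg];
else
    sample = zeros(1,6);
end
disp(sample)
```

For this reading the summed absolute acceleration is about 3.67 g, so the comparison is true and the combined sample is passed on.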

Configure this parameter in the Block Parameters dialog box of the Buffer block.

Set the Output buffer size parameter to 119.

Features are extracted by calculating the mean and the standard deviation of each column in a frame, which yields a 1-by-12 feature vector per frame, in the same form as the rows of the 100-by-12 matrix of observations used to train each gesture. These extracted features are then passed as an input to the Triggered subsystem in the Classification area.
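For one buffered frame, the feature computation reduces to a single row vector. The sketch below uses a random 119-by-6 matrix as a stand-in for the Buffer block output. The variable names are hypothetical.

```matlab
% Stand-in for one buffered frame: 119 samples of six sensor channels.
rng(0);
frame = randn(119,6);

% Column-wise mean and standard deviation, concatenated into one row.
features = [mean(frame), std(frame)];
disp(size(features))
```

The resulting vector has the same layout as one row of the training matrix: six means followed by six standard deviations.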

To open the subsystem, run this command in the MATLAB Command Window.

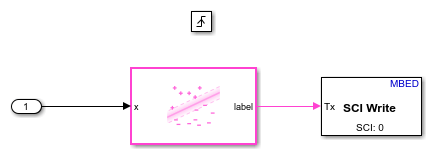

open_system('MachineLearningPrediction/Triggered Subsystem')

The Rate Transition block transfers data from the output of the Preprocessing subsystem operating at one rate to the input of the Triggered subsystem operating at a different rate.

The ClassificationTree Predict block is a library block from the Statistics and Machine Learning Toolbox™ that classifies the gestures using the extracted features. This block uses the treeMdl machine learning model to identify punches and flexes. This block outputs the predicted class label. The output is either a 0 or 1 corresponding to a punch or a flex, respectively.

The Serial Transmit block parameters are configured to their default values.

Deploy Simulink Model on Nucleo Board

1. On the Hardware tab of the Simulink model, in the Mode section, select Run on board and then click Build, Deploy & Start.

2. For easy analysis of the hand gesture data being recognized by the machine learning algorithm, run the following script in the MATLAB Command Window and read the data on the Nucleo board serial port.

device = serialport(<com_port>,9600);

while(true)

rxData = read(device,1,"double");

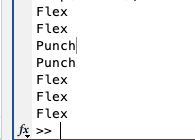

if rxData==0

disp('Punch');

elseif rxData==1

disp('Flex');

end

endReplace the port parameter with the actual com port of the Nucleo board. The gesture detected by the machine learning algorithm is displayed on the Nucleo board serial port at the baud rate of 9600 where 0 represents a punch and 1 represents a flex.

3. Hold the hardware in the palm of your hand and throw a punch or a flex. Observe the output in the MATLAB Command Window.
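When you review a longer session, it can help to decode and tally the received values in one step. The hardware-free sketch below applies the 0 and 1 encoding described above to a hypothetical vector of received values. The rxLog contents and the variable names are assumptions, not data from the board.

```matlab
% Hypothetical log of values read from the serial port (0 = punch, 1 = flex).
rxLog = [0 1 1 0 1];

labels = ["Punch" "Flex"];       % index 1 maps to value 0, index 2 to value 1
names = labels(rxLog + 1);       % decode each value to its gesture name

punchCount = sum(rxLog == 0);
flexCount  = sum(rxLog == 1);
disp(names)
```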

Migrate to Embedded Coder® Support Package for STMicroelectronics® STM32 Processors

To migrate to the Embedded Coder® Support Package for STMicroelectronics® STM32 Processors, use these resources:

The Identify Punch and Flex Hand Gestures Using Machine Learning Algorithm on STMicroelectronics STM32 Processor Boards (Embedded Coder) example, to implement the equivalent example with the STM32 processor support package.

The Migrate SCI Block Usage to STM32 Processor Based Library Block (Embedded Coder) topic, to migrate block usage.