estimateMAP

Class: HamiltonianSampler

Estimate maximum of log probability density

Syntax

xhat = estimateMAP(smp)

[xhat,fitinfo] = estimateMAP(smp)

[xhat,fitinfo] = estimateMAP(___,Name,Value)

Description

xhat = estimateMAP(smp) returns the estimated maximum of the log probability density (the MAP point) of the Hamiltonian Monte Carlo sampler smp.

[xhat,fitinfo] = estimateMAP(smp) returns additional fitting information in fitinfo.

[xhat,fitinfo] = estimateMAP(___,Name,Value) specifies additional options using one or more name-value pair arguments. Specify name-value pair arguments after all other input arguments.

Input Arguments

Name-Value Arguments

Output Arguments

Examples
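
The following is a minimal sketch of the basic call, assuming a two-dimensional Gaussian target whose mode is known in advance. The helper gaussLogPdf, the mean mu, and the option values are illustrative assumptions and do not come from this page. The sketch also assumes that the log density function passed to hmcSampler returns both the log density and its gradient, and that 'IterationLimit' and 'VerbosityLevel' are accepted option names.

```matlab
% Hypothetical target: 2-D Gaussian with mean mu and identity covariance,
% so the true MAP point is mu itself.
mu = [1; -2];
logpdf = @(x) gaussLogPdf(x, mu);

% Create the sampler from the log density and a starting point (column vector).
smp = hmcSampler(logpdf, zeros(2,1));

% Estimate the MAP point; the option values are illustrative choices only.
[xhat, fitinfo] = estimateMAP(smp, 'IterationLimit', 100, 'VerbosityLevel', 0);

disp(xhat)

% Local function (place at the end of the script file).
function [lpdf, glpdf] = gaussLogPdf(x, mu)
    lpdf  = -0.5 * sum((x - mu).^2);   % log density up to an additive constant
    glpdf = -(x - mu);                 % gradient with respect to x
end
```

For this target, xhat should land close to mu, and fitinfo carries the additional fitting information returned by the optimizer.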

Tips

First create a Hamiltonian Monte Carlo sampler using the hmcSampler function, and then use estimateMAP to estimate the MAP point.

After creating an HMC sampler, you can tune the sampler, draw samples, and check convergence diagnostics using the other methods of the HamiltonianSampler class. Using the MAP estimate as a starting point in the tuneSampler and drawSamples methods can lead to more efficient tuning and sampling. For an example of this workflow, see Bayesian Linear Regression Using Hamiltonian Monte Carlo.
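
The workflow described in these tips might look like the sketch below. The Gaussian target, the helper gaussLogPdf, and the burn-in and sample counts are assumptions made for illustration. The 'StartPoint', 'Burnin', and 'NumSamples' option names and the diagnostics method are used as I understand the HamiltonianSampler class to expose them, so check them against your release.

```matlab
% Hypothetical target: 2-D Gaussian with mean mu and identity covariance.
mu = [1; -2];
logpdf = @(x) gaussLogPdf(x, mu);
smp = hmcSampler(logpdf, zeros(2,1));

% Step 1: estimate the MAP point.
xhat = estimateMAP(smp);

% Step 2: tune the sampler, starting from the MAP estimate.
smp = tuneSampler(smp, 'StartPoint', xhat);

% Step 3: draw samples, again starting from the MAP estimate.
% The burn-in and sample counts are arbitrary illustrative values.
chain = drawSamples(smp, 'StartPoint', xhat, 'Burnin', 200, 'NumSamples', 500);

% Step 4: inspect convergence diagnostics for the chain.
diags = diagnostics(smp, chain);
disp(diags)

% Local function (place at the end of the script file).
function [lpdf, glpdf] = gaussLogPdf(x, mu)
    lpdf  = -0.5 * sum((x - mu).^2);   % log density up to an additive constant
    glpdf = -(x - mu);                 % gradient with respect to x
end
```

Starting both tuning and sampling at xhat places the chain in a high-density region from the first iteration, which is the reason the tip gives for the efficiency gain.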

Algorithms

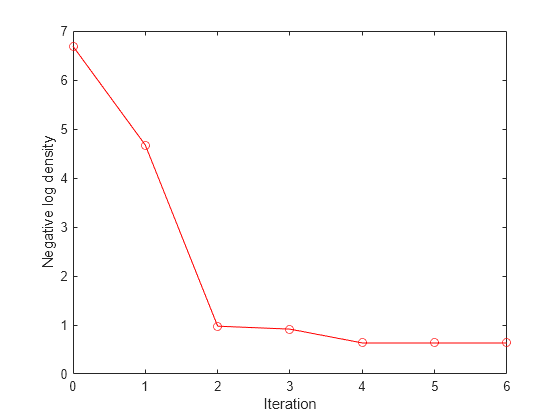

estimateMAP uses a limited-memory Broyden-Fletcher-Goldfarb-Shanno (LBFGS) quasi-Newton optimizer to search for the maximum of the log probability density. See Nocedal and Wright [1].
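
To give a feel for the technique, here is a textbook-style sketch of limited-memory BFGS using the two-loop recursion described in Nocedal and Wright [1]. It is not the implementation inside estimateMAP. The Gaussian target, the history size m, the tolerances, and the simple backtracking line search are all assumptions chosen for illustration. Maximizing the log density is done by minimizing its negative.

```matlab
% Hypothetical target: Gaussian with mean mu and precision matrix A (mode at mu).
mu = [1; -2; 0.5];
A  = diag([1 4 25]);
negLogPdf = @(x) 0.5 * (x - mu)' * A * (x - mu);  % objective to minimize
negGrad   = @(x) A * (x - mu);                    % gradient of the objective

x = zeros(3,1);     % starting point
m = 5;              % number of stored correction pairs (history size)
S = zeros(3,0);     % steps s_k = x_{k+1} - x_k
Y = zeros(3,0);     % gradient changes y_k = g_{k+1} - g_k
g = negGrad(x);

for iter = 1:100
    if norm(g) < 1e-8, break; end

    % Two-loop recursion: r approximates (inverse Hessian) * g.
    q = g;
    k = size(S,2);
    alpha = zeros(k,1);
    rho   = zeros(k,1);
    for i = k:-1:1
        rho(i)   = 1 / (Y(:,i)' * S(:,i));
        alpha(i) = rho(i) * (S(:,i)' * q);
        q = q - alpha(i) * Y(:,i);
    end
    if k > 0
        gamma = (S(:,k)' * Y(:,k)) / (Y(:,k)' * Y(:,k));  % initial Hessian scaling
    else
        gamma = 1;
    end
    r = gamma * q;
    for i = 1:k
        beta = rho(i) * (Y(:,i)' * r);
        r = r + S(:,i) * (alpha(i) - beta);
    end
    d = -r;  % search direction

    % Backtracking line search with the Armijo sufficient-decrease condition.
    t  = 1;
    f0 = negLogPdf(x);
    while negLogPdf(x + t*d) > f0 + 1e-4 * t * (g' * d)
        t = t / 2;
    end

    xNew = x + t*d;
    gNew = negGrad(xNew);
    s = xNew - x;
    y = gNew - g;
    if y' * s > 1e-12            % store only pairs with positive curvature
        S = [S, s];
        Y = [Y, y];
        if size(S,2) > m         % drop the oldest pair once the history is full
            S(:,1) = [];
            Y(:,1) = [];
        end
    end
    x = xNew;
    g = gNew;
end

xhat = x;   % estimated mode of the target
disp(xhat)
```

Only the m most recent (s, y) pairs are kept, so memory grows linearly with the number of variables rather than quadratically, which is what distinguishes the limited-memory variant from full BFGS.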

References

[1] Nocedal, J. and S. J. Wright. Numerical Optimization, Second Edition. Springer Series in Operations Research, Springer Verlag, 2006.

Version History

Introduced in R2017a