verifyNetworkRobustness

Syntax

Description

result = verifyNetworkRobustness(net,XLower,XUpper,label) verifies whether the network net is adversarially robust with respect to the class label when the input is between XLower and XUpper. For more information, see Adversarial Examples.

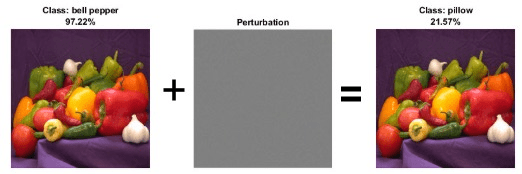

A network is robust to adversarial examples for a specific input if the predicted class does not change when the input is perturbed between XLower and XUpper. For more information, see Algorithms.

The verifyNetworkRobustness function requires the Deep Learning Toolbox Verification Library support package. If this support package is not installed, install it using the Add-On Explorer. To open the Add-On Explorer, go to the MATLAB® Toolstrip and click Add-Ons > Get Add-Ons.

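result = verifyNetworkRobustness(___,Name=Value) verifies the adversarial robustness with additional options specified by one or more name-value arguments.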
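
As a minimal sketch of both syntaxes, the following code builds a small untrained network and checks one observation. The layer sizes, the perturbation size, and the label are hypothetical values chosen for illustration, and MiniBatchSize is assumed here as a representative name-value option. The sketch also assumes that the bounds are passed as formatted dlarray objects and that the label is given as a numeric class index. Because the network is untrained, the returned result carries no meaning beyond showing the call pattern.

```matlab
% Hypothetical network: 4 input features, 3 output classes.
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(3)];
net = dlnetwork(layers);   % initialized with random weights

% One observation and a small symmetric perturbation (illustrative values).
X = rand(4,1);
epsilon = 0.01;
XLower = dlarray(X - epsilon,"CB");   % "CB" = channel-by-batch
XUpper = dlarray(X + epsilon,"CB");

% Class index to verify against (assumed to be class 1 here).
label = 1;

% Basic syntax.
result = verifyNetworkRobustness(net,XLower,XUpper,label);

% Same check with a name-value argument (assumed option: MiniBatchSize).
resultBatched = verifyNetworkRobustness(net,XLower,XUpper,label, ...
    MiniBatchSize=32);

disp(result)
```

In practice, net would be a trained classification network and label would be the true class of X.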

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

Algorithms

To verify the robustness of a network for an input, the function checks that when the input is perturbed between the specified lower and upper bound, the output does not significantly change.

Let $X$ be an input with respect to which you want to test the robustness of the network. To use the verifyNetworkRobustness function, you must specify a lower and upper bound for the input. For example, let $\epsilon$ be a small perturbation. You can define a lower and upper bound for the input as $X_{\mathrm{lower}} = X - \epsilon$ and $X_{\mathrm{upper}} = X + \epsilon$, respectively.
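
A short sketch of how such bounds might be built for a batch of inputs is shown below. The data values and the perturbation size are hypothetical, and the final conversion assumes that the function expects formatted dlarray inputs with features along the first dimension.

```matlab
% Hypothetical batch: 3 features (rows) by 2 observations (columns).
X = [0.2 0.7;
     0.5 0.1;
     0.9 0.4];

% Small perturbation applied equally to every element (illustrative value).
epsilon = 0.05;

% Elementwise lower and upper bounds around each observation.
XLower = X - epsilon;
XUpper = X + epsilon;

% Sanity check: every lower bound must not exceed its upper bound.
assert(all(XLower <= XUpper,"all"))

% Wrap the bounds as formatted dlarray objects ("CB" = channel-by-batch).
XLower = dlarray(XLower,"CB");
XUpper = dlarray(XUpper,"CB");
```

Each column then defines one pair of bounds, so the two observations would be verified independently.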

To verify the adversarial robustness of the network, the function checks that, for all inputs between Xlower and Xupper, no adversarial example exists. To check for adversarial examples, the function uses these steps.

1. Create an input set using the lower and upper input bounds.

2. Pass the input set through the network and return an output set. To reduce computational overhead, the function performs abstract interpretation by approximating the output of each layer using the DeepPoly [2] method.

3. Check if the specified label remains the same for the entire input set. Because the algorithm uses overapproximation when it computes the output set, the result can be unproven if part of the output set corresponds to an adversarial example.
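
The three steps above can be illustrated with a deliberately simplified sketch. It uses plain interval bound propagation, which is a coarser abstraction than the DeepPoly method the function uses, and it is not the function's implementation. The weights, biases, input, and label are hypothetical, and the code needs only base MATLAB.

```matlab
% Hypothetical network: y = W{2}*relu(W{1}*x + b{1}) + b{2}
W = {[1 -1; 1 1], eye(2)};
b = {[0; 0], [0; 0]};

% Step 1: create the input set as an axis-aligned box around X.
X = [1; 1];
epsilon = 0.1;
lower = X - epsilon;
upper = X + epsilon;
label = 2;                       % class index to verify (assumed)

% Step 2: propagate the box through each layer (interval arithmetic).
for k = 1:numel(W)
    center = (lower + upper)/2;
    radius = (upper - lower)/2;
    newCenter = W{k}*center + b{k};
    newRadius = abs(W{k})*radius;    % worst-case spread of the affine map
    lower = newCenter - newRadius;
    upper = newCenter + newRadius;
    if k < numel(W)                  % ReLU after every layer except the last
        lower = max(lower,0);
        upper = max(upper,0);
    end
end

% Step 3: compare the output bounds of the label with all other classes.
others = setdiff(1:numel(lower),label);
if lower(label) > max(upper(others))
    result = "verified";    % label wins for every input in the box
elseif upper(label) < max(lower(others))
    result = "violated";    % label loses for every input in the box
else
    result = "unproven";    % bounds overlap, so the check is inconclusive
end
disp(result)                % displays: verified
```

For these values the output bounds are [0, 0.2] for class 1 and [1.8, 2.2] for class 2, so the intervals do not overlap. With a larger epsilon the intervals can overlap even when no adversarial example exists, which is how overapproximation leads to an unproven result.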

If you specify multiple pairs of input lower and upper bounds, then the function verifies the robustness for each pair of input bounds.

Note

Soundness with respect to floating point: In rare cases, floating-point rounding errors can accumulate, which can cause the network output to fall outside the computed bounds and the verification results to differ. This can also happen when working with networks you produced using C/C++ code generation.

References

[1] Goodfellow, Ian J., Jonathon Shlens, and Christian Szegedy. “Explaining and Harnessing Adversarial Examples.” Preprint, submitted March 20, 2015. https://arxiv.org/abs/1412.6572.

[2] Singh, Gagandeep, Timon Gehr, Markus Püschel, and Martin Vechev. “An Abstract Domain for Certifying Neural Networks.” Proceedings of the ACM on Programming Languages 3, no. POPL (January 2, 2019): 1–30. https://dl.acm.org/doi/10.1145/3290354.