Define Custom Deep Learning Layer with Formatted Inputs

If Deep Learning Toolbox™ does not provide the layer you require for your task, then you can define your own custom layer using this example as a guide. For a list of built-in layers, see List of Deep Learning Layers.

To define a custom deep learning layer, you can use the template provided in this example, which takes you through these steps:

Name the layer: Give the layer a name so that you can use it in MATLAB®.

Declare the layer properties: Specify the properties of the layer, including learnable parameters and state parameters.

Create the constructor function (optional): Specify how to construct the layer and initialize its properties. If you do not specify a constructor function, then at creation, the software initializes the Name, Description, and Type properties with [] and sets the number of layer inputs and outputs to 1.

Create initialize function (optional): Specify how to initialize the learnable and state parameters when the software initializes the network. If you do not specify an initialize function, then the software does not initialize parameters when it initializes the network.

Create forward functions: Specify how data passes forward through the layer (forward propagation) at prediction time and at training time.

Create reset state function (optional): Specify how to reset state parameters.

Create a backward function (optional): Specify the derivatives of the loss with respect to the input data and the learnable parameters (backward propagation). If you do not specify a backward function, then the forward functions must support dlarray objects.

When you define the layer functions, you can use dlarray objects.

Using dlarray objects makes working with high-dimensional data easier by allowing you to label the dimensions. For example, you can label which dimensions correspond to spatial, time, channel, and batch dimensions using the "S", "T", "C", and "B" labels, respectively. For unspecified and other dimensions, use the "U" label. For dlarray object functions that operate over particular dimensions, you can specify the dimension labels by formatting the dlarray object directly, or by using the DataFormat option.
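As a brief sketch of the two ways to supply dimension labels (assuming Deep Learning Toolbox is installed; the data, sizes, and variable names are illustrative only), the following formats one dlarray directly and passes the labels for an unformatted one through the DataFormat option of fullyconnect. Both calls compute the same values; only the formatting of the result differs.

```matlab
% Illustrative mini-batch of eight 16-by-16 RGB images.
X = rand(16,16,3,8,"single");

% Fully connect parameters with 10 outputs. The weights matrix has one
% column per input element of an observation (16*16*3).
weights = dlarray(0.01*randn(10,16*16*3,"single"));
bias = dlarray(zeros(10,1,"single"));

% Option 1: format the dlarray object directly.
dlX = dlarray(X,"SSCB");
Y1 = fullyconnect(dlX,weights,bias);                      % formatted output

% Option 2: keep the data unformatted and pass the labels with DataFormat.
dlU = dlarray(X);
Y2 = fullyconnect(dlU,weights,bias,DataFormat="SSCB");    % unformatted output

disp(dims(dlX))   % SSCB
disp(dims(Y1))    % CB
```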

Using formatted dlarray objects in layer functions also allows you to define layers where the inputs and outputs have different formats, such as layers that permute, add, or remove dimensions. For example, you can define a layer that takes as input a mini-batch of images with the format "SSCB" (spatial, spatial, channel, batch) and outputs a mini-batch of sequences with the format "CBT" (channel, batch, time). Using formatted dlarray objects also allows you to define layers that can operate on data with different input formats, for example, layers that support inputs with the formats "SSCB" (spatial, spatial, channel, batch) and "CBT" (channel, batch, time).
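The following sketch shows how a layer function might convert a mini-batch of "SSCB" images into "CBT" sequences. The helper imagesToSequences is hypothetical and not part of the toolbox; it assumes that each image column is one time step and that the rows and channels of that column become the features. It removes the formats with stripdims before rearranging the data, so that permute and reshape act on plain dimension indices, and then relabels the result.

```matlab
% Eight illustrative 4-by-5 images with 3 channels.
X = dlarray(rand(4,5,3,8,"single"),"SSCB");
Y = imagesToSequences(X);
disp(dims(Y))   % CBT
disp(size(Y))   % (4*3) features, 8 observations, 5 time steps

function Y = imagesToSequences(X)
% Hypothetical helper: convert a formatted "SSCB" dlarray of size
% h-by-w-by-c-by-N into a "CBT" dlarray of size (h*c)-by-N-by-w, treating
% each image column as one time step.
h = size(X,1);
w = size(X,2);
c = size(X,3);
N = size(X,4);

Z = stripdims(X);              % unformatted h-by-w-by-c-by-N
Z = permute(Z,[1 3 4 2]);      % h-by-c-by-N-by-w
Z = reshape(Z,h*c,N,w);        % (h*c)-by-N-by-w
Y = dlarray(Z,"CBT");          % relabel as channel, batch, time
end
```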

dlarray objects also enable support for automatic differentiation. Consequently, if your forward functions fully support dlarray objects, then defining the backward function is optional.
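For example, this minimal sketch uses dlfeval and dlgradient to compute the gradient of a loss through fullyconnect without any hand-written backward code. The loss (a sum of squared outputs) and the sizes are illustrative assumptions, not part of the layer in this example.

```matlab
% Illustrative data: 100 features, mini-batch of 8 observations.
X = dlarray(rand(100,8,"single"),"CB");
W = dlarray(0.01*randn(16,100,"single"));
b = dlarray(zeros(16,1,"single"));

% dlfeval traces the operations so that dlgradient can differentiate them.
[loss,gradW] = dlfeval(@modelLoss,X,W,b);
disp(size(gradW))   % same size as W

function [loss,gradW] = modelLoss(X,W,b)
% Illustrative loss: sum of squared outputs of a fully connect operation.
Y = fullyconnect(X,W,b);
loss = sum(Y.^2,"all");
% Automatic differentiation computes dloss/dW from the recorded operations.
gradW = dlgradient(loss,W);
end
```

For this loss the analytic gradient is 2*(W*X + b)*X', which gives an easy way to check the automatic result.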

This example shows how to create a project and reshape layer, which is a layer commonly used in generative adversarial networks (GANs) that takes an array of noise with format "CB" (channel, batch) and projects and reshapes it to a mini-batch of images with format "SSCB" (spatial, spatial, channel, batch) using fully connected, reshape, and relabel operations.

Custom Layer Template

Copy the custom layer template into a new file in MATLAB. This template gives the structure of a layer class definition. It outlines:

- The optional properties blocks for the layer properties, learnable parameters, and state parameters.
- The optional layer constructor function.
- The optional initialize function.
- The predict function and the optional forward function.
- The optional resetState function for layers with state properties.
- The optional backward function.

classdef myLayer < nnet.layer.Layer % ...
        % & nnet.layer.Formattable ... % (Optional)
        % & nnet.layer.Acceleratable % (Optional)

    properties
        % (Optional) Layer properties.

        % Declare layer properties here.
    end

    properties (Learnable)
        % (Optional) Layer learnable parameters.

        % Declare learnable parameters here.
    end

    properties (State)
        % (Optional) Layer state parameters.

        % Declare state parameters here.
    end

    properties (Learnable, State)
        % (Optional) Nested dlnetwork objects with both learnable
        % parameters and state parameters.

        % Declare nested networks with learnable and state parameters here.
    end

    methods
        function layer = myLayer()
            % (Optional) Create a myLayer.
            % This function must have the same name as the class.

            % Define layer constructor function here.
        end

        function layer = initialize(layer,layout)
            % (Optional) Initialize layer learnable and state parameters.
            %
            % Inputs:
            %         layer  - Layer to initialize
            %         layout - Data layout, specified as a networkDataLayout
            %                  object
            %
            % Outputs:
            %         layer - Initialized layer
            %
            %  - For layers with multiple inputs, replace layout with
            %    layout1,...,layoutN, where N is the number of inputs.

            % Define layer initialization function here.
        end

        function [Y,state] = predict(layer,X)
            % Forward input data through the layer at prediction time and
            % output the result and updated state.
            %
            % Inputs:
            %         layer - Layer to forward propagate through
            %         X     - Input data
            % Outputs:
            %         Y     - Output of layer forward function
            %         state - (Optional) Updated layer state
            %
            %  - For layers with multiple inputs, replace X with X1,...,XN,
            %    where N is the number of inputs.
            %  - For layers with multiple outputs, replace Y with
            %    Y1,...,YM, where M is the number of outputs.
            %  - For layers with multiple state parameters, replace state
            %    with state1,...,stateK, where K is the number of state
            %    parameters.

            % Define layer predict function here.
        end

        function [Y,state,memory] = forward(layer,X)
            % (Optional) Forward input data through the layer at training
            % time and output the result, the updated state, and a memory
            % value.
            %
            % Inputs:
            %         layer - Layer to forward propagate through
            %         X     - Layer input data
            % Outputs:
            %         Y      - Output of layer forward function
            %         state  - (Optional) Updated layer state
            %         memory - (Optional) Memory value for custom backward
            %                  function
            %
            %  - For layers with multiple inputs, replace X with X1,...,XN,
            %    where N is the number of inputs.
            %  - For layers with multiple outputs, replace Y with
            %    Y1,...,YM, where M is the number of outputs.
            %  - For layers with multiple state parameters, replace state
            %    with state1,...,stateK, where K is the number of state
            %    parameters.

            % Define layer forward function here.
        end

        function layer = resetState(layer)
            % (Optional) Reset layer state.

            % Define reset state function here.
        end

        function [dLdX,dLdW,dLdSin] = backward(layer,X,Y,dLdY,dLdSout,memory)
            % (Optional) Backward propagate the derivative of the loss
            % function through the layer.
            %
            % Inputs:
            %         layer   - Layer to backward propagate through
            %         X       - Layer input data
            %         Y       - Layer output data
            %         dLdY    - Derivative of loss with respect to layer
            %                   output
            %         dLdSout - (Optional) Derivative of loss with respect
            %                   to state output
            %         memory  - Memory value from forward function
            % Outputs:
            %         dLdX   - Derivative of loss with respect to layer input
            %         dLdW   - (Optional) Derivative of loss with respect to
            %                  learnable parameter
            %         dLdSin - (Optional) Derivative of loss with respect to
            %                  state input
            %
            %  - For layers with state parameters, the backward syntax must
            %    include both dLdSout and dLdSin, or neither.
            %  - For layers with multiple inputs, replace X and dLdX with
            %    X1,...,XN and dLdX1,...,dLdXN, respectively, where N is
            %    the number of inputs.
            %  - For layers with multiple outputs, replace Y and dLdY with
            %    Y1,...,YM and dLdY1,...,dLdYM, respectively, where M is the
            %    number of outputs.
            %  - For layers with multiple learnable parameters, replace
            %    dLdW with dLdW1,...,dLdWP, where P is the number of
            %    learnable parameters.
            %  - For layers with multiple state parameters, replace dLdSin
            %    and dLdSout with dLdSin1,...,dLdSinK and
            %    dLdSout1,...,dLdSoutK, respectively, where K is the number
            %    of state parameters.

            % Define layer backward function here.
        end
    end
end

Name Layer and Specify Superclasses

First, give the layer a name. In the first line of the class file, replace the existing name myLayer with projectAndReshapeLayer.

classdef projectAndReshapeLayer < nnet.layer.Layer % ...
        % & nnet.layer.Formattable ... % (Optional)
        % & nnet.layer.Acceleratable % (Optional)
    ...
end

You can specify how to process formatted and unformatted data that the software passes to and from the layer by opting to inherit from the nnet.layer.Formattable class. This table describes how the software processes formatted and unformatted data in custom layers.

| Layer Definition | Input Data Processing | Output Data Processing |
|---|---|---|
| Inherits from nnet.layer.Formattable | The software passes the layer input to the layer function directly. | The software passes the layer function outputs to subsequent layers directly. Warning: For custom layers that inherit from … |
| Does not inherit from nnet.layer.Formattable | The software removes the formats from any formatted inputs and passes the unformatted data to the layer function. | The output data must be unformatted. The software applies the formats of the layer inputs to any unformatted layer function outputs, and passes the result to subsequent layers. |

Because a project and reshape layer outputs data with different dimensions than the input data (it adds spatial dimensions), the layer must also inherit from nnet.layer.Formattable. This enables the layer to receive and output formatted dlarray objects.

Next, specify that the layer inherits from both the nnet.layer.Layer and nnet.layer.Formattable superclasses. The layer functions also support acceleration, so also inherit from nnet.layer.Acceleratable. For more information about accelerating custom layer functions, see Custom Layer Function Acceleration.

classdef projectAndReshapeLayer < nnet.layer.Layer ...
        & nnet.layer.Formattable ...
        & nnet.layer.Acceleratable
    ...
end

Next, rename the myLayer constructor function (the first function in the methods section) so that it has the same name as the layer.

methods
    function layer = projectAndReshapeLayer()
        ...
    end

    ...
end

Save the Layer

Save the layer class file in a new file named projectAndReshapeLayer.m. The file name must match the layer name. To use the layer, you must save the file in the current folder or in a folder on the MATLAB path.

Declare Properties and Learnable Parameters

Declare the layer properties in the properties section and declare learnable parameters by listing them in the properties (Learnable) section.

By default, custom layers have these properties. Do not declare these properties in the properties section.

| Property | Description |
|---|---|
| Name | Layer name, specified as a character vector or a string scalar. For Layer array input, the trainnet and dlnetwork functions automatically assign names to unnamed layers. |
| Description | One-line description of the layer, specified as a string scalar or a character vector. This description appears when you display a Layer array. If you do not specify a layer description, then the software displays the layer class name. |
| Type | Type of the layer, specified as a character vector or a string scalar. The value of Type appears when you display a Layer array. If you do not specify a layer type, then the software displays the layer class name. |
| NumInputs | Number of inputs of the layer, specified as a positive integer. If you do not specify this value, then the software automatically sets NumInputs to the number of names in InputNames. The default value is 1. |
| InputNames | Input names of the layer, specified as a cell array of character vectors. If you do not specify this value and NumInputs is greater than 1, then the software automatically sets InputNames to {'in1',...,'inN'}, where N is equal to NumInputs. The default value is {'in'}. |
| NumOutputs | Number of outputs of the layer, specified as a positive integer. If you do not specify this value, then the software automatically sets NumOutputs to the number of names in OutputNames. The default value is 1. |
| OutputNames | Output names of the layer, specified as a cell array of character vectors. If you do not specify this value and NumOutputs is greater than 1, then the software automatically sets OutputNames to {'out1',...,'outM'}, where M is equal to NumOutputs. The default value is {'out'}. |

If the layer has no other properties, then you can omit the properties section.

Tip

If you are creating a layer with multiple inputs, then you must set either the NumInputs or InputNames properties in the layer constructor. If you are creating a layer with multiple outputs, then you must set either the NumOutputs or OutputNames properties in the layer constructor. For an example, see Define Custom Deep Learning Layer with Multiple Inputs.

A project and reshape layer requires an additional property that holds the layer output size. Specify a single property with name OutputSize in the properties section.

properties
    % Output size
    OutputSize
end

A project and reshape layer has two learnable parameters: the weights and the biases of the fully connect operation. Declare these learnable parameters in the properties (Learnable) section and call the parameters Weights and Bias, respectively.

properties (Learnable)
    % Layer learnable parameters
    Weights
    Bias
end

Create Constructor Function

Create the function that constructs the layer and initializes the layer properties. Specify any variables required to create the layer as inputs to the constructor function.

The project and reshape layer constructor function requires one input argument that specifies the layer output size and one optional input argument that specifies the layer name.

In the constructor function projectAndReshapeLayer, specify the required input argument named outputSize and the optional arguments as name-value arguments with the name NameValueArgs. Add a comment to the top of the function that explains the syntax of the function.

function layer = projectAndReshapeLayer(outputSize,NameValueArgs)
    % layer = projectAndReshapeLayer(outputSize) creates a
    % projectAndReshapeLayer object that projects and reshapes the
    % input to the specified output size.
    %
    % layer = projectAndReshapeLayer(outputSize,Name=name) also
    % specifies the layer name.

    ...
end

Parse Input Arguments

Parse the input arguments using an arguments block. List the arguments in the same order as the function syntax and specify the default values. Then, extract the values from the NameValueArgs input.

% Parse input arguments.
arguments
    outputSize
    NameValueArgs.Name = ''
end
name = NameValueArgs.Name;
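To see how this kind of arguments block behaves in isolation, here is a sketch using a hypothetical local function, describeLayer, that parses its inputs the same way as the constructor. The size and value validators on outputSize are an addition for illustration only; the layer in this example does not use them.

```matlab
s1 = describeLayer([4 4 512]);              % Name defaults to ''
s2 = describeLayer([4 4 512],Name="proj");  % Name supplied as a name-value argument
disp(s1)
disp(s2)

function s = describeLayer(outputSize,NameValueArgs)
% Hypothetical helper that mirrors the constructor's argument parsing.
arguments
    outputSize (1,3) {mustBePositive,mustBeInteger}   % illustrative validation
    NameValueArgs.Name = ''
end
name = NameValueArgs.Name;
s = "Layer '" + name + "' with output size " + join(string(outputSize));
end
```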

Initialize Layer Properties

Initialize the layer properties in the constructor function. Replace the comment % Define layer constructor function here with code that initializes the layer properties. Do not initialize learnable or state parameters in the constructor function; initialize them in the initialize function instead.

Set the Name property to the input argument name.

% Set layer name.
layer.Name = name;

Give the layer a one-line description by setting the Description property of the layer. Set the description to describe the type of layer and its size.

% Set layer description.
layer.Description = "Project and reshape layer with output size " + join(string(outputSize));

Specify the type of the layer by setting the Type property. The value of Type appears when the layer is displayed in a Layer array.

% Set layer type.
layer.Type = "Project and Reshape";

Set the layer property OutputSize to the specified input value.

% Set output size.
layer.OutputSize = outputSize;

View the completed constructor function.

function layer = projectAndReshapeLayer(outputSize,NameValueArgs)
    % layer = projectAndReshapeLayer(outputSize)
    % creates a projectAndReshapeLayer object that projects and
    % reshapes the input to the specified output size.
    %
    % layer = projectAndReshapeLayer(outputSize,Name=name)
    % also specifies the layer name.

    % Parse input arguments.
    arguments
        outputSize
        NameValueArgs.Name = '';
    end
    name = NameValueArgs.Name;

    % Set layer name.
    layer.Name = name;

    % Set layer description.
    layer.Description = "Project and reshape layer with output size " + join(string(outputSize));

    % Set layer type.
    layer.Type = "Project and Reshape";

    % Set output size.
    layer.OutputSize = outputSize;
end

With this constructor function, the command projectAndReshapeLayer([4 4 512],Name="proj"); creates a project and reshape layer with name "proj" that projects the input arrays to a batch of 4-by-4 images with 512 channels.

Create Initialize Function

Create the function that initializes the layer learnable and state parameters when the software initializes the network. Ensure that the function only initializes learnable and state parameters when the property is empty. Otherwise, the software can overwrite the loaded parameter values when you load the network from a MAT file.

To initialize the learnable parameter Weights, generate a random array using Glorot initialization. To initialize the learnable parameter Bias, create a vector of zeros with one element per output of the fully connect operation, that is, prod(OutputSize) elements. Only initialize the weights and bias when they are empty.

Because the size of the input data is unknown until the network is ready to use, you must create an initialize function that initializes the learnable and state parameters using networkDataLayout objects that the software provides to the function. Network data layout objects contain information about the sizes and formats of expected input data. Create an initialize function that uses the size and format information to initialize learnable and state parameters such that they have the correct size.
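For example, assuming the layer receives mini-batches of 100-element feature vectors (as in the generator network later in this example), a networkDataLayout object with format "CB" describes that input, with NaN standing in for the batch size that is not yet known. This sketch shows how the channel count, and from it the size of the fully connect weights, can be read from such a layout.

```matlab
% Expected input: 100 channels, unknown (NaN) batch size.
layout = networkDataLayout([100 NaN],"CB");

% Find the channel dimension and read its size.
idx = finddim(layout,"C");
numChannels = layout.Size(idx);

% The fully connect weights map numChannels inputs to prod(outputSize) outputs.
outputSize = [4 4 512];
sz = [prod(outputSize) numChannels];
disp(sz)   % 8192 and 100
```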

A project and reshape layer applies a fully connect operation to project the input to a batch of images. Initialize the weights using Glorot initialization and initialize the bias with an array of zeros. The functions initializeGlorot and initializeZeros are attached to the example Train Generative Adversarial Network (GAN) as supporting files. To access these functions, open this example as a live script. For more information about initializing learnable parameters for deep learning operations, see Initialize Learnable Parameters for Model Function.
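If the supporting files are not at hand, the following is a minimal sketch of what such helpers could look like. These versions are assumptions written for illustration, not the supporting files themselves: initializeGlorot samples uniformly from the interval [-b, b] with b = sqrt(6/(numIn + numOut)) (Glorot, or Xavier, uniform initialization), and initializeZeros returns zeros. Both return single-precision dlarray objects.

```matlab
% Example sizes from the project and reshape layer: 8192 outputs, 100 inputs.
W = initializeGlorot([8192 100],8192,100);
b = initializeZeros([8192 1]);

function weights = initializeGlorot(sz,numOut,numIn)
% Sketch of Glorot (Xavier) uniform initialization.
Z = 2*rand(sz,"single") - 1;          % uniform samples in (-1,1)
bound = sqrt(6/(numIn + numOut));     % Glorot bound
weights = dlarray(bound*Z);
end

function parameter = initializeZeros(sz)
% Sketch of zero initialization.
parameter = dlarray(zeros(sz,"single"));
end
```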

function layer = initialize(layer,layout)
    % layer = initialize(layer,layout) initializes the layer
    % learnable parameters using the specified input layout.

    % Layer output size.
    outputSize = layer.OutputSize;

    % Initialize fully connect weights.
    if isempty(layer.Weights)
        % Find number of channels.
        idx = finddim(layout,"C");
        numChannels = layout.Size(idx);

        % Initialize using Glorot.
        sz = [prod(outputSize) numChannels];
        numOut = prod(outputSize);
        numIn = numChannels;
        layer.Weights = initializeGlorot(sz,numOut,numIn);
    end

    % Initialize fully connect bias.
    if isempty(layer.Bias)
        % Initialize with zeros.
        layer.Bias = initializeZeros([prod(outputSize) 1]);
    end
end

Create Forward Functions

Create the layer forward functions to use at prediction time and training time.

Create a function named predict that propagates the data forward through the layer at prediction time and outputs the result.

The predict function syntax depends on the type of layer.

Y = predict(layer,X) forwards the input data X through the layer and outputs the result Y, where layer has a single input and a single output.

[Y,state] = predict(layer,X) also outputs the updated state parameter state, where layer has a single state parameter.

You can adjust the syntaxes for layers with multiple inputs, multiple outputs, or multiple state parameters:

For layers with multiple inputs, replace X with X1,...,XN, where N is the number of inputs. The NumInputs property must match N.

For layers with multiple outputs, replace Y with Y1,...,YM, where M is the number of outputs. The NumOutputs property must match M.

For layers with multiple state parameters, replace state with state1,...,stateK, where K is the number of state parameters.

Tip

If the number of inputs to the layer can vary, then use varargin instead of X1,…,XN. In this case, varargin is a cell array of the inputs, where varargin{i} corresponds to Xi.

If the number of outputs can vary, then use varargout instead of Y1,…,YM. In this case, varargout is a cell array of the outputs, where varargout{j} corresponds to Yj.

Tip

If the custom layer has a dlnetwork object for a learnable parameter, then in the predict function of the custom layer, use the predict function for the dlnetwork. When you do so, the dlnetwork object predict function uses the appropriate layer operations for prediction. If the dlnetwork has state parameters, then also return the network state.

Because a project and reshape layer has only one input and one output, the syntax for predict for a project and reshape layer is Y = predict(layer,X).

By default, the layer uses predict as the forward function at training time. To use a different forward function at training time, or retain a value required for a custom backward function, you must also create a function named forward.

The dimensions of the inputs depend on the type of data and the output of the connected layers:

| Layer Input | Example Shape | Example Data Format |
|---|---|---|
| 2-D images | h-by-w-by-c-by-N numeric array, where h, w, c, and N are the height, width, number of channels of the images, and number of observations, respectively. | "SSCB" |
| 3-D images | h-by-w-by-d-by-c-by-N numeric array, where h, w, d, c, and N are the height, width, depth, number of channels of the images, and number of image observations, respectively. | "SSSCB" |
| Vector sequences | c-by-N-by-s array, where c is the number of features of the sequence, N is the number of sequence observations, and s is the sequence length. | "CBT" |
| 2-D image sequences | h-by-w-by-c-by-N-by-s array, where h, w, and c correspond to the height, width, and number of channels of the image, respectively, N is the number of image sequence observations, and s is the sequence length. | "SSCBT" |
| 3-D image sequences | h-by-w-by-d-by-c-by-N-by-s array, where h, w, d, and c correspond to the height, width, depth, and number of channels of the image, respectively, N is the number of image sequence observations, and s is the sequence length. | "SSSCBT" |
| Features | c-by-N array, where c is the number of features, and N is the number of observations. | "CB" |

For layers that output sequences, the layers can output sequences of any length or output data with no time dimension.

Because the custom layer inherits from the nnet.layer.Formattable class, the layer receives formatted dlarray objects with labels corresponding to the output of the previous layer.
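One detail worth knowing when a layer function indexes into these inputs: a formatted dlarray object stores its dimensions in the order "S", "C", "B", "T", "U", regardless of the order in which you specify the labels. Rather than hard-coding dimension positions, a layer function can look them up with finddim. A short sketch with illustrative sizes:

```matlab
% 20 time steps, 8 features, 4 observations, labeled in "TCB" order.
X = rand(20,8,4,"single");
dlX = dlarray(X,"TCB");

disp(dims(dlX))          % CBT: the data is permuted into canonical order
disp(size(dlX))          % 8-by-4-by-20
disp(finddim(dlX,"T"))   % 3
```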

The forward function propagates the data forward through the layer at training time and can also output a memory value.

The forward function syntax depends on the type of layer:

Y = forward(layer,X) forwards the input data X through the layer and outputs the result Y, where layer has a single input and a single output.

[Y,state] = forward(layer,X) also outputs the updated state parameter state, where layer has a single state parameter.

[__,memory] = forward(layer,X) also returns a memory value for a custom backward function using any of the previous syntaxes. If the layer has both a custom forward function and a custom backward function, then the forward function must return a memory value.

You can adjust the syntaxes for layers with multiple inputs, multiple outputs, or multiple state parameters:

For layers with multiple inputs, replace X with X1,...,XN, where N is the number of inputs. The NumInputs property must match N.

For layers with multiple outputs, replace Y with Y1,...,YM, where M is the number of outputs. The NumOutputs property must match M.

For layers with multiple state parameters, replace state with state1,...,stateK, where K is the number of state parameters.

Tip

If the number of inputs to the layer can vary, then use varargin instead of X1,…,XN. In this case, varargin is a cell array of the inputs, where varargin{i} corresponds to Xi.

If the number of outputs can vary, then use varargout instead of Y1,…,YM. In this case, varargout is a cell array of the outputs, where varargout{j} corresponds to Yj.

Tip

If the custom layer has a dlnetwork object for a learnable parameter, then in the forward function of the custom layer, use the forward function of the dlnetwork object. When you do so, the dlnetwork object forward function uses the appropriate layer operations for training.

The project and reshape operation consists of three operations:

Apply a fully connect operation with the learnable weights and biases.

Reshape the output to the specified output size.

Relabel the dimensions so that the output has format 'SSCB' (spatial, spatial, channel, batch).
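Before writing the layer, the three steps can be tried outside it. This sketch uses illustrative sizes matching the generator network later in this example (100 latent inputs, output size [4 4 512]) and small random weights in place of Glorot-initialized ones; it is a standalone check, not the layer code itself.

```matlab
numLatentInputs = 100;
miniBatchSize = 8;
outputSize = [4 4 512];

% Noise input with format "CB" (channel, batch).
X = dlarray(randn(numLatentInputs,miniBatchSize,"single"),"CB");

% Illustrative parameters (the layer creates these in its initialize function).
weights = dlarray(0.01*randn(prod(outputSize),numLatentInputs,"single"));
bias = dlarray(zeros(prod(outputSize),1,"single"));

% 1. Fully connect: 8192-by-8 with format "CB".
Z = fullyconnect(X,weights,bias);

% 2. Reshape to 4-by-4-by-512-by-8. The result of reshape is unformatted.
Y = reshape(Z,outputSize(1),outputSize(2),outputSize(3),[]);

% 3. Relabel the dimensions as spatial, spatial, channel, batch.
Y = dlarray(Y,"SSCB");
disp(dims(Y))   % SSCB
```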

Implement this operation in the predict function. The project and reshape layer does not require memory or a different forward function for training, so you can remove the forward function from the class file. Add a comment to the top of the function that explains the syntaxes of the function.

function Y = predict(layer, X)
    % Forward input data through the layer at prediction time and
    % output the result.
    %
    % Inputs:
    %         layer - Layer to forward propagate through
    %         X     - Input data, specified as a formatted dlarray
    %                 with a 'C' and optionally a 'B' dimension.
    % Outputs:
    %         Y     - Output of layer forward function returned as
    %                 a formatted dlarray with format 'SSCB'.

    % Fully connect.
    weights = layer.Weights;
    bias = layer.Bias;
    X = fullyconnect(X,weights,bias);

    % Reshape.
    outputSize = layer.OutputSize;
    Y = reshape(X, outputSize(1), outputSize(2), outputSize(3), []);

    % Relabel.
    Y = dlarray(Y,'SSCB');
end

Tip

If you preallocate arrays using functions such as zeros, then you must ensure that the data types of these arrays are consistent with the layer function inputs. To create an array of zeros of the same data type as another array, use the like option of zeros. For example, to initialize an array of zeros of size sz with the same data type as the array X, use Y = zeros(sz,like=X).
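A short sketch of the difference, with an illustrative input: without like, zeros returns a double array; with like=X, where X is a dlarray with single-precision data, the result is also a dlarray with single-precision data.

```matlab
X = dlarray(rand(3,4,"single"),"CB");

A = zeros([3 4]);          % plain double array
B = zeros([3 4],like=X);   % dlarray whose underlying data is single

disp(class(A))             % double
disp(class(B))             % dlarray
disp(underlyingType(B))    % single
```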

Because the predict function uses only functions that support dlarray objects, defining the backward function is optional. For a list of functions that support dlarray objects, see List of Functions with dlarray Support.

Completed Layer

View the completed layer class file.

classdef projectAndReshapeLayer < nnet.layer.Layer ...
        & nnet.layer.Formattable ...
        & nnet.layer.Acceleratable
    % Example project and reshape layer.

    properties
        % Output size
        OutputSize
    end

    properties (Learnable)
        % Layer learnable parameters
        Weights
        Bias
    end

    methods
        function layer = projectAndReshapeLayer(outputSize,NameValueArgs)
            % layer = projectAndReshapeLayer(outputSize)
            % creates a projectAndReshapeLayer object that projects and
            % reshapes the input to the specified output size.
            %
            % layer = projectAndReshapeLayer(outputSize,Name=name)
            % also specifies the layer name.

            % Parse input arguments.
            arguments
                outputSize
                NameValueArgs.Name = '';
            end
            name = NameValueArgs.Name;

            % Set layer name.
            layer.Name = name;

            % Set layer description.
            layer.Description = "Project and reshape layer with output size " + join(string(outputSize));

            % Set layer type.
            layer.Type = "Project and Reshape";

            % Set output size.
            layer.OutputSize = outputSize;
        end

        function layer = initialize(layer,layout)
            % layer = initialize(layer,layout) initializes the layer
            % learnable parameters using the specified input layout.

            % Layer output size.
            outputSize = layer.OutputSize;

            % Initialize fully connect weights.
            if isempty(layer.Weights)
                % Find number of channels.
                idx = finddim(layout,"C");
                numChannels = layout.Size(idx);

                % Initialize using Glorot.
                sz = [prod(outputSize) numChannels];
                numOut = prod(outputSize);
                numIn = numChannels;
                layer.Weights = initializeGlorot(sz,numOut,numIn);
            end

            % Initialize fully connect bias.
            if isempty(layer.Bias)
                % Initialize with zeros.
                layer.Bias = initializeZeros([prod(outputSize) 1]);
            end
        end

        function Y = predict(layer, X)
            % Forward input data through the layer at prediction time and
            % output the result.
            %
            % Inputs:
            %         layer - Layer to forward propagate through
            %         X     - Input data, specified as a formatted dlarray
            %                 with a 'C' and optionally a 'B' dimension.
            % Outputs:
            %         Y     - Output of layer forward function returned as
            %                 a formatted dlarray with format 'SSCB'.

            % Fully connect.
            weights = layer.Weights;
            bias = layer.Bias;
            X = fullyconnect(X,weights,bias);

            % Reshape.
            outputSize = layer.OutputSize;
            Y = reshape(X, outputSize(1), outputSize(2), outputSize(3), []);

            % Relabel.
            Y = dlarray(Y,'SSCB');
        end
    end
end

GPU Compatibility

If the layer forward functions fully support dlarray objects, then the layer is GPU compatible. Otherwise, to be GPU compatible, the layer functions must support inputs and return outputs of type gpuArray (Parallel Computing Toolbox).

Many MATLAB built-in functions support gpuArray (Parallel Computing Toolbox) and dlarray input arguments. For a list of functions that support dlarray objects, see List of Functions with dlarray Support. For a list of functions that execute on a GPU, see Run MATLAB Functions on a GPU (Parallel Computing Toolbox). To use a GPU for deep learning, you must also have a supported GPU device. For information on supported devices, see GPU Computing Requirements (Parallel Computing Toolbox). For more information on working with GPUs in MATLAB, see GPU Computing in MATLAB (Parallel Computing Toolbox).

In this example, the MATLAB functions used in predict all support dlarray objects, so the layer is GPU compatible.
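As a sketch of how the same operations run on either device, the following moves illustrative data and parameters to the GPU only when canUseGPU reports a usable GPU. fullyconnect then runs wherever the data lives, and the result remains a dlarray in both cases. The sizes mirror the project and reshape layer but are otherwise arbitrary.

```matlab
X = dlarray(randn(100,8,"single"),"CB");
weights = dlarray(0.01*randn(8192,100,"single"));
bias = dlarray(zeros(8192,1,"single"));

% Move data and parameters to the GPU only if one is available.
if canUseGPU
    X = gpuArray(X);
    weights = gpuArray(weights);
    bias = gpuArray(bias);
end

Y = fullyconnect(X,weights,bias);

% The underlying data of Y is a gpuArray only when the inputs were moved.
onGPU = isa(extractdata(Y),"gpuArray");
```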

Include Custom Layer in Network

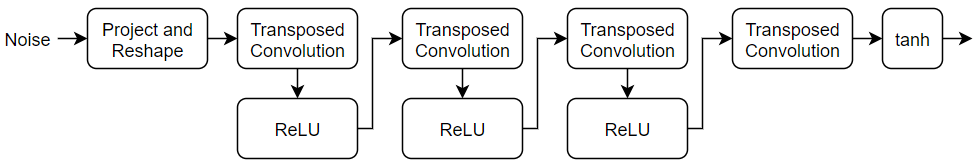

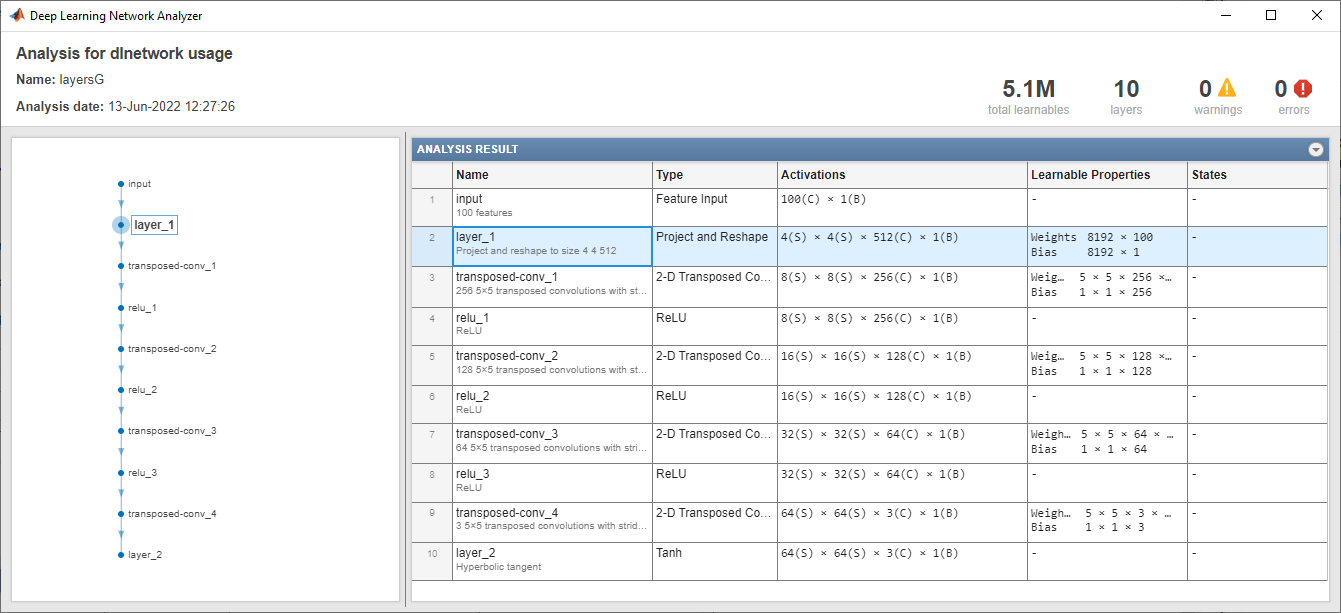

Define the following generator network architecture for a GAN, which generates images from 1-by-1-by-100 arrays of random values:

This network:

Converts the random vectors of size 100 to 4-by-4-by-512 arrays using a project and reshape layer.

Upscales the resulting arrays to 64-by-64-by-3 arrays using a series of transposed convolution layers and ReLU layers.

Define this network architecture as a layer array and specify the following network properties.

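For the transposed convolution layers, specify 5-by-5 filters with a decreasing number of filters for each layer. For the second and subsequent transposed convolution layers, also specify a stride of 2 and set the cropping to "same" so that each of these layers doubles the spatial size of its input.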

For the final transposed convolution layer, specify three 5-by-5 filters corresponding to the three RGB channels of the generated images, and the output size of the previous layer.

At the end of the network, include a tanh layer.

To project and reshape the noise input, use the custom layer projectAndReshapeLayer.

filterSize = 5;
numFilters = 64;
numLatentInputs = 100;
projectionSize = [4 4 512];

layersG = [
    featureInputLayer(numLatentInputs,Normalization="none")
    projectAndReshapeLayer(projectionSize)
    transposedConv2dLayer(filterSize,4*numFilters)
    reluLayer
    transposedConv2dLayer(filterSize,2*numFilters,Stride=2,Cropping="same")
    reluLayer
    transposedConv2dLayer(filterSize,numFilters,Stride=2,Cropping="same")
    reluLayer
    transposedConv2dLayer(filterSize,3,Stride=2,Cropping="same")
    tanhLayer];

Use the analyzeNetwork function to check the size and format of the layer activations. To analyze the network for custom training loop workflows, set the TargetUsage option to "dlnetwork".

analyzeNetwork(layersG,TargetUsage="dlnetwork")

As expected, the project and reshape layer takes input data with format "CB" (channel, batch) and outputs data with format "SSCB" (spatial, spatial, channel, batch).

To train the network with a custom training loop and enable automatic differentiation, convert the layer array to a dlnetwork object.

netG = dlnetwork(layersG);
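To see why this generator produces 64-by-64-by-3 images, the spatial size can be traced by hand. This sketch assumes the transposed convolution output-size rule for numeric cropping, stride*(inputSize - 1) + filterSize - 2*cropping, and that Cropping="same" gives an output of size inputSize*stride.

```matlab
filterSize = 5;
sz = 4;                                % spatial size after projectAndReshapeLayer
sz = 1*(sz - 1) + filterSize - 2*0;    % first layer: stride 1, no cropping -> 8
for k = 1:3
    sz = 2*sz;                         % stride 2 with Cropping="same" -> 16, 32, 64
end
numChannelsOut = 3;                    % final layer has three filters (RGB)
disp([sz sz numChannelsOut])           % 64 64 3
```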

See Also

trainnet | trainingOptions | dlnetwork | dlarray | functionLayer | checkLayer | setLearnRateFactor | setL2Factor | getLearnRateFactor | getL2Factor | findPlaceholderLayers | replaceLayer | PlaceholderLayer | networkDataLayout

Topics

- Define Custom Deep Learning Layers

- Define Custom Deep Learning Layer with Learnable Parameters

- Define Custom Deep Learning Layer with Multiple Inputs

- Define Custom Recurrent Deep Learning Layer

- Specify Custom Layer Backward Function

- Define Custom Deep Learning Layer for Code Generation

- Define Nested Deep Learning Layer Using Network Composition

- Check Custom Layer Validity