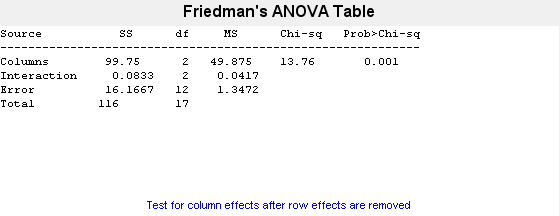

Friedman's test is similar to classical balanced two-way ANOVA, but it tests only for column effects after adjusting for possible row effects. It does not test for row effects or interaction effects. Friedman's test is appropriate when columns represent treatments that are under study, and rows represent nuisance effects (blocks) that need to be taken into account but are not of any interest.

The different columns of `X` represent changes in a factor A. The different rows represent changes in a blocking factor B. If there is more than one observation for each combination of factors, the input `reps` indicates the number of replicates in each “cell,” which must be constant.

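The matrix below illustrates the format for a set-up where column factor A has three levels, row factor B has two levels, and there are two replicates (`reps=2`). The subscripts indicate row, column, and replicate, respectively.

$$
\begin{bmatrix}
x_{111} & x_{121} & x_{131} \\
x_{112} & x_{122} & x_{132} \\
x_{211} & x_{221} & x_{231} \\
x_{212} & x_{222} & x_{232}
\end{bmatrix}
$$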
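As a rough illustration of this layout, the Python sketch below stacks the replicates of each block into consecutive rows. The helper name `stack_replicates` and the nested-list input indexed as `data[row][column][replicate]` are assumptions made for this example; they are not part of `friedman`.

```python
# Hypothetical helper for illustration only; not part of any library.
def stack_replicates(data):
    """Build the stacked matrix from data[row][column][replicate].

    Returns (X, reps), where X has one matrix row per (block, replicate) pair.
    """
    reps = len(data[0][0])
    # The number of replicates must be the same in every cell.
    if any(len(cell) != reps for block in data for cell in block):
        raise ValueError("the number of replicates per cell must be constant")
    X = []
    for block in data:              # levels of row (blocking) factor B
        for k in range(reps):       # replicates within the block
            X.append([cell[k] for cell in block])  # levels of column factor A
    return X, reps


# Two blocks, three treatments, two replicates; labels stand in for numbers.
data = [
    [["x111", "x112"], ["x121", "x122"], ["x131", "x132"]],
    [["x211", "x212"], ["x221", "x222"], ["x231", "x232"]],
]
X, reps = stack_replicates(data)
for row in X:
    print(row)
```

Each printed row holds one replicate of one block across the three levels of A, so the first `reps` rows belong to the first level of B, the next `reps` rows to the second, and so on.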

Friedman's test assumes a model of the form

$$
x_{ijk} = \mu + \alpha_j + \beta_i + \varepsilon_{ijk}
$$

where, for row $i$, column $j$, and replicate $k$, $\mu$ is an overall location parameter, $\alpha_j$ represents the column effect, $\beta_i$ represents the row effect, and $\varepsilon_{ijk}$ represents the error. This test ranks the data within each level of B, and tests for a difference across levels of A. The `p` that `friedman` returns is the p value for the null hypothesis that $\alpha_j = 0$ for every column $j$. If the p value is near zero, this casts doubt on the null hypothesis. A sufficiently small p value suggests that at least one column-sample median differs significantly from the others; i.e., there is a main effect due to factor A. The choice of a critical p value to determine whether a result is “statistically significant” is left to the researcher. It is common to declare a result significant if the p value is less than 0.05 or 0.01.
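The rank-then-compare idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation behind `friedman`: it handles only one observation per cell (no replicates), applies no correction for ties, and uses the textbook large-sample chi-square approximation with one degree of freedom fewer than the number of columns. The function names `average_ranks`, `chi2_sf`, and `friedman_sketch` and the sample data are invented for the example.

```python
import math


def average_ranks(values):
    """Rank values from 1 to k; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share this rank
        for t in range(i, j + 1):
            ranks[order[t]] = mean_rank
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail chi-square probability via a series for the incomplete gamma."""
    if x <= 0:
        return 1.0
    a, half_x = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = a
    while abs(term) > 1e-15 * abs(total):
        n += 1.0
        term *= half_x / n
        total += term
    lower = total * math.exp(-half_x + a * math.log(half_x) - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def friedman_sketch(X):
    """Return (statistic, p) for rows = blocks, columns = treatments."""
    n, k = len(X), len(X[0])
    rank_sums = [0.0] * k
    for row in X:  # rank within each block (level of B)
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    stat = 12.0 / (n * k * (k + 1)) * sum(R * R for R in rank_sums) - 3.0 * n * (k + 1)
    return stat, chi2_sf(stat, k - 1)


# Made-up data: four blocks (rows), three treatments (columns).
X = [
    [1.0, 2.0, 3.0],
    [2.5, 3.5, 4.0],
    [1.2, 3.1, 2.0],
    [0.9, 1.5, 2.2],
]
stat, p = friedman_sketch(X)
print(round(stat, 3), round(p, 4))  # statistic 6.5, p about 0.0388
```

Here the column rank sums are 4, 9, and 11, so the first treatment is ranked lowest in every block. The resulting p value falls below 0.05 but not below 0.01, which shows why the choice of threshold matters.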

Friedman's test makes the following assumptions about the data in `X`:

- All data come from populations having the same continuous distribution, apart from possibly different locations due to column and row effects.
- All observations are mutually independent.

The classical two-way ANOVA replaces the first assumption with the stronger assumption that data come from normal distributions.