Simulink Deep Learning for Intel & ARM CPUs: Generate C++ Code Using Simulink Coder & Embedded Coder

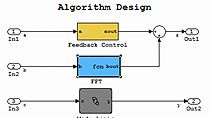

Learn how you can use Simulink® to design complex systems that include decision logic, controllers, sensor fusion, vehicle dynamics, and 3D visualization components.

As of Release 2020b, you can incorporate deep learning networks into your Simulink models to perform system-level simulation and deployment.

Learn how to run simulations of a lane and vehicle detector using deep learning networks based on YOLO v2 in Simulink on ARM® Cortex®-A and Intel® CPUs. The Simulink model includes preprocessing and postprocessing components that perform operations such as resizing incoming videos, detecting coordinates, and drawing bounding boxes around detected vehicles. With the same Simulink model, you can generate optimized C++ code using ARM Compute Library or Intel MKL-DNN (oneDNN) to target ARM Cortex-A and Intel CPUs.

Published: 18 Nov 2020

Simulink is a trusted tool for designing complex systems that include decision logic and controllers, sensor fusion, vehicle dynamics, and 3D visualization components. As of Release 2020b, you can incorporate deep learning networks into your Simulink models to perform system-level simulation and deployment. If we look inside the Vehicle Lane Detection subsystem, we'll see the use of two deep learning networks. Now the input video is going to go in. We'll do some preprocessing to resize the image.
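As an illustration of the resize step just mentioned, here is a minimal C++ sketch of nearest-neighbor resizing. It is not the code Simulink generates, and the video does not say which interpolation method the model uses. The `GrayImage` type and `resizeNearest` function are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-channel image type used only for this sketch.
struct GrayImage {
    std::size_t width = 0;
    std::size_t height = 0;
    std::vector<std::uint8_t> pixels;  // row-major, width * height entries
};

// Nearest-neighbor resize (an assumed method, chosen for brevity): each output
// pixel copies the source pixel found by scaling its coordinates by the
// ratio of source size to output size.
GrayImage resizeNearest(const GrayImage& src, std::size_t outWidth, std::size_t outHeight) {
    assert(src.width > 0 && src.height > 0);
    assert(src.pixels.size() == src.width * src.height);
    assert(outWidth > 0 && outHeight > 0);

    GrayImage dst;
    dst.width = outWidth;
    dst.height = outHeight;
    dst.pixels.resize(outWidth * outHeight);

    for (std::size_t y = 0; y < outHeight; ++y) {
        const std::size_t srcY = y * src.height / outHeight;  // integer scaling
        for (std::size_t x = 0; x < outWidth; ++x) {
            const std::size_t srcX = x * src.width / outWidth;
            dst.pixels[y * outWidth + x] = src.pixels[srcY * src.width + srcX];
        }
    }
    return dst;
}
```

A network typically expects a fixed input size, so a step like this would run on every frame before inference.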

From there, it's sent to the Lane Detector, and we can see the Lane Detector being defined in the MAT-file here. From there, we'll send it for some postprocessing to detect the coordinates and then draw left and right lanes in the output video. Now in parallel, the input video will also be sent to the second deep learning network, the YOLO v2 network, and here you can see that being defined in the MAT-file inside the MATLAB Function block. From there, we'll send it again to do some annotations to draw bounding boxes around detected vehicles. So here's a simulation running on the CPU.
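The annotation step described above, drawing bounding boxes around detected vehicles, can be sketched roughly as follows. This is an assumption-laden illustration, not the model's actual annotation code: the `Box` type and `drawBoxOutline` function are hypothetical, and the sketch works on a single-channel, row-major pixel buffer rather than a color video frame.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical box type: top-left corner plus size, all in pixels.
struct Box {
    std::size_t x;
    std::size_t y;
    std::size_t width;
    std::size_t height;
};

// Draws a one-pixel outline of the box into a row-major image buffer,
// clipping the box to the image bounds.
void drawBoxOutline(std::vector<std::uint8_t>& pixels,
                    std::size_t imgWidth, std::size_t imgHeight,
                    const Box& box, std::uint8_t value) {
    assert(pixels.size() == imgWidth * imgHeight);
    // Nothing to draw for an empty box or one that starts outside the image.
    if (box.width == 0 || box.height == 0 || box.x >= imgWidth || box.y >= imgHeight) {
        return;
    }
    const std::size_t right = std::min(box.x + box.width, imgWidth) - 1;
    const std::size_t bottom = std::min(box.y + box.height, imgHeight) - 1;

    for (std::size_t x = box.x; x <= right; ++x) {
        pixels[box.y * imgWidth + x] = value;   // top edge
        pixels[bottom * imgWidth + x] = value;  // bottom edge
    }
    for (std::size_t y = box.y; y <= bottom; ++y) {
        pixels[y * imgWidth + box.x] = value;   // left edge
        pixels[y * imgWidth + right] = value;   // right edge
    }
}
```

In a real pipeline, the boxes would come from the detector's output after thresholding and coordinate scaling, which this sketch leaves out.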

The frame rate is a little low, but the input video is on the left and the output video is on the right. And you can see that we're detecting the left and right lanes, highlighting them in green, and drawing bounding boxes around vehicles that we see. Now, we're ready for code generation, so we can launch either the Simulink Coder or the Embedded Coder app. In this case, we'll start Embedded Coder, and we will go into the configuration parameters. At the top, you'll see the appropriate system target file.

We set the language to C++ for deep learning networks, and towards the bottom here, you can see that we're making use of the Microsoft Visual C++ toolchain. Under Interface, this is where we can specify the deep learning target library, and we can choose either MKL-DNN for Intel CPUs or ARM Compute Library for ARM Cortex-A processors. So in this case, we'll keep MKL-DNN so that we can run this on our Intel CPU, and let's generate code. So here's the Code Generation report.

Let's first look for the step function. I'm scrolling down a little bit. Here you can see the part of the code where we call inference for the first of the two deep learning networks. Now let's take a look at how the deep learning networks are defined. Here's the one for LaneNet, and here you can see the set of public and private methods: setup, predict, and cleanup.

Now we can also take a look at the second deep learning network, the YOLO v2 network. And here we have a similar set of public and private methods: setup, predict, an additional activations method, and cleanup. If we wanted to, we could look inside setup, which runs once at the beginning of the program. And here you can see the deep learning network being loaded one layer at a time. And as we load each layer, we also load its weights and biases.
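To make the setup, predict, and cleanup lifecycle concrete, here is a minimal C++ sketch of a class with the same three phases. It is not the class Embedded Coder generates: `SketchNetwork` and `ScaleLayer` are hypothetical names, and each "layer" is reduced to a single elementwise scale and offset so the example stays short.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a real layer: y = weight * x + bias, elementwise.
struct ScaleLayer {
    float weight;
    float bias;
};

class SketchNetwork {
public:
    // Runs once at start-up: builds the network one layer at a time,
    // loading each layer's weight and bias as the layer is created.
    void setup(const std::vector<float>& weights, const std::vector<float>& biases) {
        assert(weights.size() == biases.size());
        layers_.clear();
        for (std::size_t i = 0; i < weights.size(); ++i) {
            layers_.push_back(ScaleLayer{weights[i], biases[i]});
        }
        isSetUp_ = true;
    }

    // Runs on every step: passes the input through each layer in order.
    std::vector<float> predict(std::vector<float> data) const {
        assert(isSetUp_);
        for (const ScaleLayer& layer : layers_) {
            for (float& value : data) {
                value = layer.weight * value + layer.bias;
            }
        }
        return data;
    }

    // Runs once at shutdown: releases the layers.
    void cleanup() {
        layers_.clear();
        isSetUp_ = false;
    }

    std::size_t layerCount() const { return layers_.size(); }

private:
    std::vector<ScaleLayer> layers_;
    bool isSetUp_ = false;
};
```

Keeping the layer and weight loading in setup means the per-frame cost of predict is inference only, which matches the description of setup running once at the beginning of the program.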

So that's a quick look at generating code from deep learning networks inside of Simulink for CPUs, such as Intel and ARM processors. For more information, take a look at the links below.