MATLAB, Simulink, and RoadRunner advance the design of automated driving perception, planning, and control systems by enabling engineers to gain insight into real-world behavior, reduce vehicle testing, and verify the functionality of embedded software. With MATLAB, Simulink, and RoadRunner, you can:

- Access, visualize, and label data

- Simulate driving scenarios

- Design planning and control algorithms

- Design perception algorithms

- Deploy algorithms using code generation

- Integrate and test


Access, Visualize, and Label Data

You can access live and recorded driving data using MATLAB interfaces for CAN and ROS. Using built-in tools, you can also visualize and label imported data. For example, the Ground Truth Labeler app lets you visualize and label multiple signals interactively, or label them automatically, and then export the labeled data to your workspace.

To access and visualize geographic map data, you can use HERE HD Live Map and OpenStreetMap.


Simulate Driving Scenarios

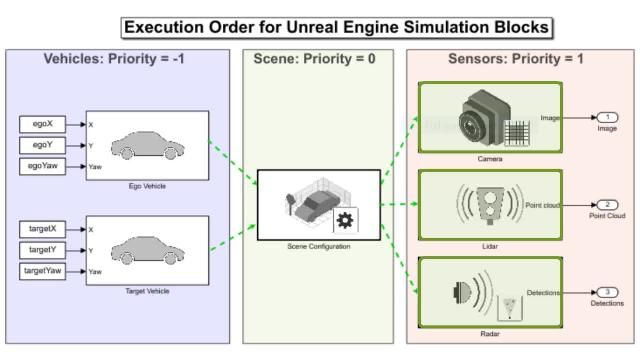

You can use cuboid and Unreal Engine simulation environments with MATLAB to develop and test algorithms in virtual scenarios.

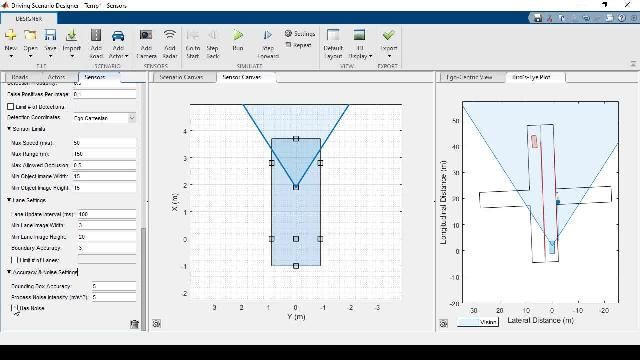

The cuboid environment represents actors as simple graphics and uses probabilistic sensor models. You can use this environment for controls, sensor fusion, and motion planning.
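To make the idea of a probabilistic sensor model concrete, here is a minimal sketch in Python. It is an illustration only, not the MathWorks sensor models or their API: the `Actor` class, the `simulate_detections` function, and all parameter values (range limit, detection probability, noise level) are assumptions chosen for the example. Each actor inside the sensor range is reported with some probability, and reported positions carry Gaussian noise.

```python
import random
from dataclasses import dataclass


@dataclass
class Actor:
    """Hypothetical actor reduced to a point in the ego vehicle frame."""
    x: float  # longitudinal position in metres
    y: float  # lateral position in metres


def simulate_detections(actors, rng, max_range=100.0,
                        detection_prob=0.9, noise_std=0.5):
    """Return noisy (x, y) detections for the actors the sensor reports."""
    detections = []
    for actor in actors:
        distance = (actor.x ** 2 + actor.y ** 2) ** 0.5
        if distance > max_range:
            continue  # actor lies outside the sensor coverage
        if rng.random() > detection_prob:
            continue  # missed detection
        # Add independent Gaussian measurement noise to each coordinate.
        detections.append((actor.x + rng.gauss(0.0, noise_std),
                           actor.y + rng.gauss(0.0, noise_std)))
    return detections


rng = random.Random(0)  # fixed seed so runs are repeatable
actors = [Actor(20.0, 0.0), Actor(50.0, -3.5), Actor(150.0, 0.0)]
detections = simulate_detections(actors, rng)
```

The third actor is beyond the assumed 100 m range, so it is never reported. A model of this kind runs quickly and needs no rendered imagery, which is why it suits controls, sensor fusion, and motion planning studies.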

Using the Unreal Engine environment, you can develop perception algorithms in addition to the use cases supported by the cuboid environment. RoadRunner lets you design scenes for use with MATLAB and Simulink and with other simulators, including CARLA, VIRES VTD, and NVIDIA DRIVE Sim. RoadRunner also supports export to industry-standard file formats such as FBX and OpenDRIVE.


Design Planning and Control Algorithms

Using MATLAB and Simulink, you can develop path planning and control algorithms. You can design vehicle control systems using lateral and longitudinal controllers that enable autonomous vehicles to follow a planned trajectory.
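As a rough illustration of how lateral and longitudinal controllers cooperate to follow a trajectory, the Python sketch below pairs a pure pursuit steering law (lateral) with a PI speed controller (longitudinal) on a kinematic bicycle model. These are common textbook techniques picked for the example, not the specific controllers that MATLAB and Simulink provide. All names, gains, and vehicle parameters are assumptions, and the reference path is simply the straight line y = 0.

```python
import math


def pure_pursuit_steering(x, y, yaw, target_x, target_y, wheelbase):
    """Lateral control: steering angle (rad) that arcs toward a look-ahead point."""
    dx, dy = target_x - x, target_y - y
    lookahead = math.hypot(dx, dy)
    alpha = math.atan2(dy, dx) - yaw  # bearing of the target relative to heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


class PISpeedController:
    """Longitudinal control: PI law on speed error, returns an acceleration command."""

    def __init__(self, kp=1.0, ki=0.25):
        self.kp, self.ki = kp, ki
        self.integral = 0.0

    def update(self, target_speed, speed, dt):
        error = target_speed - speed
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral


def simulate(duration=20.0, dt=0.05, wheelbase=2.7,
             lookahead=5.0, target_speed=10.0):
    """Follow the line y = 0 with a kinematic bicycle model (Euler integration)."""
    x, y, yaw, speed = 0.0, 2.0, 0.0, 5.0  # start 2 m off the line at 5 m/s
    speed_ctrl = PISpeedController()
    for _ in range(int(duration / dt)):
        # Aim at the point on the reference line a fixed distance ahead.
        steer = pure_pursuit_steering(x, y, yaw, x + lookahead, 0.0, wheelbase)
        accel = speed_ctrl.update(target_speed, speed, dt)
        x += speed * math.cos(yaw) * dt
        y += speed * math.sin(yaw) * dt
        yaw += speed / wheelbase * math.tan(steer) * dt
        speed += accel * dt
    return x, y, yaw, speed


final_x, final_y, final_yaw, final_speed = simulate()
```

With these assumed gains the lateral offset decays toward zero and the speed settles near the 10 m/s target. A production controller would also need actuator limits, anti-windup, and a curved reference path, all omitted here for brevity.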

You can also test your algorithms in simulation using sensor models and vehicle dynamics models, along with 2D and 3D simulation environments.


Design Perception Algorithms

You can develop perception algorithms using data from camera, lidar, and radar sensors. Perception algorithms include detection, tracking, and localization.

You can implement these algorithms as part of ADAS applications such as automatic emergency braking and steering.

Using MATLAB, you can develop algorithms for sensor fusion, simultaneous localization and mapping (SLAM), map building, and odometry.
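For a sense of what sensor fusion does at its simplest, the Python sketch below combines two independent range measurements of the same object by inverse-variance weighting, which is the static, single-step case of a Kalman filter update. It is a generic illustration rather than a MathWorks API. The `fuse` function and the radar and camera values and variances are made-up numbers for the example.

```python
def fuse(measurements):
    """Fuse independent (value, variance) measurements of one quantity.

    Each measurement is weighted by the inverse of its variance, so the
    more precise sensor dominates the fused estimate.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical range readings in metres with their variances in m^2.
radar = (25.3, 0.25)   # radar: precise range
camera = (24.1, 4.0)   # camera: coarser range
fused_range, fused_variance = fuse([radar, camera])
```

The fused estimate lies between the two readings, closer to the radar value, and its variance is smaller than that of either sensor alone. Trackers used in practice extend this idea over time with a motion model and data association.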


Deploy Algorithms

You can deploy perception, planning, and control algorithms to hardware using code generation workflows. Supported code generation languages include C, C++, CUDA, Verilog, and VHDL®.

You can also deploy algorithms to service-oriented architectures like ROS and AUTOSAR.

Using auto-generated code, you can connect sensors with other ECU components. Supported deployment targets include hardware from NVIDIA, Intel, and ARM, among others.

Integrate and Test

You can integrate and test your perception, planning, and control systems. Using Requirements Toolbox, you can capture and manage your requirements. You can also use Simulink Test to run and automate test cases in parallel.

Reference Applications

Use the examples below as a basis for designing and testing ADAS and automated driving applications.

- Adaptive Cruise Control with Sensor Fusion

- Lane Keeping Assist with Lane Detection

- Autonomous Emergency Braking with Sensor Fusion

- Forward Collision Warning Using Sensor Fusion

- Forward Collision Warning Application with CAN FD and TCP/IP

- Automated Parking Valet

- Lane Following Control with Sensor Fusion and Lane Detection

Can’t find what you’re looking for? Visit our Automated Driving documentation page for a full list of examples.