Sensor Fusion and Tracking for Autonomous Systems

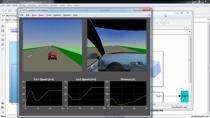

Autonomous systems are a focus for academia, government agencies, and multiple industries. These systems range from road vehicles that meet the various NHTSA levels of autonomy to consumer quadcopters capable of autonomous flight and remote piloting, package delivery drones, flying taxis, and robots for disaster relief and space exploration. In this talk, you will learn to design, simulate, and analyze systems that fuse data from multiple sensors to maintain position, orientation, and situational awareness. Fusing data from multiple sensors can produce better estimates than the output of any individual sensor could provide on its own. Several autonomous system examples are explored to show you how to:

- Define trajectories and create multiplatform scenarios

- Simulate measurements from inertial and GPS sensors

- Generate object detections with sensor models

- Design multi-object trackers as well as fusion and localization algorithms

- Evaluate system accuracy and performance on real and synthetic data

Recorded: 6 Nov 2019